OP Stack Specification

About Optimism

Optimism is a project dedicated to scaling Ethereum's technology and expanding its ability to coordinate people from across the world to build effective decentralized economies and governance systems. The Optimism Collective builds open-source software that powers scalable blockchains and aims to address key governance and economic challenges in the wider Ethereum ecosystem. Optimism operates on the principle of impact=profit, the idea that individuals who positively impact the Collective should be proportionally rewarded with profit.

Change the incentives and you change the world.

About the OP Stack

The OP Stack is a decentralized software stack maintained by the Optimism Collective that forms the backbone of blockchains like OP Mainnet and Base. The OP Stack is designed to be aggressively open-source: you are welcome to explore, modify, and extend the OP Stack to your heart's content.

Background

Overview

The OP Stack is a decentralized software stack maintained by the Optimism Collective that forms the backbone of blockchains like OP Mainnet and Base. The OP Stack provides the infrastructure for operating EVM equivalent rollup blockchains designed to scale Ethereum while remaining maximally compatible with existing Ethereum infrastructure. This document gives an overview of the protocol as context for the rest of the specification.

Foundations

Ethereum Scalability

Scaling Ethereum means increasing the number of useful transactions the Ethereum network can process. Ethereum's limited resources, specifically bandwidth, computation, and storage, constrain the number of transactions that can be processed on the network. Of the three resources, computation and storage are currently the most significant bottlenecks. These bottlenecks limit the supply of transactions, leading to extremely high fees. Scaling Ethereum and reducing fees can be achieved by better utilizing bandwidth, computation, and storage.

Optimistic Rollups

An Optimistic Rollup is a layer 2 scalability construction which increases the computation and storage capacity of Ethereum while aiming to minimize sacrifices to security or decentralization. In a nutshell, an Optimistic Rollup utilizes Ethereum (or some other data availability layer) to host transaction data. Layer 2 nodes then execute a state transition function over this data. Users can propose the result of this off-chain execution to a smart contract on L1. A "fault proving" process can then demonstrate that a user's proposal is (or is not) valid.

EVM Equivalence

EVM Equivalence is complete compliance with the state transition function described in the Ethereum yellow paper, the formal definition of the protocol. By conforming to the Ethereum standard across EVM equivalent rollups, smart contract developers can write once and deploy anywhere.

Protocol Guarantees

We strive to preserve three critical properties: liveness, validity, and availability. A protocol that can maintain these properties can, effectively, scale Ethereum without sacrificing security.

Liveness

Liveness is defined as the ability of any party to extend the rollup chain by including a transaction within a bounded amount of time. It should not be possible for an actor to block the inclusion of any given transaction for more than this bounded time period. The bound should also be short enough that inclusion is not just theoretically possible but practically useful.

Validity

Validity is defined as the ability for any party to execute the rollup state transition function, subject to certain lower bound expectations for available computing and bandwidth resources. Validity is also extended to refer to the ability for a smart contract on Ethereum to be able to validate this state transition function economically.

Availability

Availability is defined as the ability for any party to retrieve the inputs that are necessary to execute the rollup state transition function correctly. Availability is essentially an element of validity and is required to be able to guarantee validity in general. Similar to validity, availability is subject to lower bound resource requirements.

Network Participants

Generally speaking, there are three primary actors that interact with an OP Stack chain: users, sequencers, and verifiers.

graph TD

EthereumL1(Ethereum L1)

subgraph "L2 Participants"

Users(Users)

Sequencers(Sequencers)

Verifiers(Verifiers)

end

Verifiers -.->|fetch transaction batches| EthereumL1

Verifiers -.->|fetch deposit data| EthereumL1

Verifiers -->|submit/validate/challenge output proposals| EthereumL1

Verifiers -.->|fetch realtime P2P updates| Sequencers

Users -->|submit deposits/withdrawals| EthereumL1

Users -->|submit transactions| Sequencers

Users -->|query data| Verifiers

Sequencers -->|submit transaction batches| EthereumL1

Sequencers -.->|fetch deposit data| EthereumL1

classDef l1Contracts stroke:#bbf,stroke-width:2px;

classDef l2Components stroke:#333,stroke-width:2px;

classDef systemUser stroke:#f9a,stroke-width:2px;

class EthereumL1 l1Contracts;

class Users,Sequencers,Verifiers l2Components;

Users

Users are the general class of network participants who:

- Submit transactions through a Sequencer or by interacting with contracts on Ethereum.

- Query transaction data from interfaces operated by verifiers.

Sequencers

Sequencers fill the role of the block producer on an OP Stack chain. Chains may have a single Sequencer or may choose to utilize some consensus protocol that coordinates multiple Sequencers. The OP Stack currently officially supports only a single active Sequencer at any given time. In general, specifications may use the term "the Sequencer" as a stand-in for either a single Sequencer or a consensus protocol of multiple Sequencers.

The Sequencer:

- Accepts transactions directly from Users.

- Observes "deposit" transactions generated on Ethereum.

- Consolidates both transaction streams into ordered L2 blocks.

- Submits information to L1 that is sufficient to fully reproduce those L2 blocks.

- Provides real-time access to pending L2 blocks that have not yet been confirmed on L1.

The Sequencer serves an important role for the operation of an L2 chain but is not a trusted actor. The Sequencer is generally responsible for improving the user experience by ordering transactions much more quickly and cheaply than would currently be possible if users were to submit all transactions directly to L1.

Verifiers

Verifiers download and execute the L2 state transition function independently of the Sequencer. Verifiers help to maintain the integrity of the network and serve blockchain data to Users.

Verifiers generally:

- Download rollup data from L1 and the Sequencer.

- Use rollup data to execute the L2 state transition function.

- Serve rollup data and computed L2 state information to Users.

Verifiers can also act as Proposers and/or Challengers who:

- Submit assertions about the state of the L2 to a smart contract on L1.

- Validate assertions made by other participants.

- Dispute invalid assertions made by other participants.
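The verifier and challenger roles listed above can be illustrated with a toy model. This is a minimal sketch under stated assumptions: the balance-map state, `apply_transaction`, and `compute_state_root` are hypothetical stand-ins and do not reflect the real OP Stack state transition function or output root format.

```python
import hashlib
import json


def apply_transaction(state, tx):
    """Toy state transition: move `amount` from `sender` to `recipient`."""
    new_state = dict(state)
    if new_state.get(tx["sender"], 0) < tx["amount"]:
        return new_state  # assumption: invalid transfers are no-ops in this toy model
    new_state[tx["sender"]] = new_state.get(tx["sender"], 0) - tx["amount"]
    new_state[tx["recipient"]] = new_state.get(tx["recipient"], 0) + tx["amount"]
    return new_state


def compute_state_root(state):
    """Hypothetical commitment to the state (a hash of its canonical JSON form)."""
    encoded = json.dumps(state, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def execute(genesis, transactions):
    """Run the toy state transition function over an ordered list of transactions."""
    state = genesis
    for tx in transactions:
        state = apply_transaction(state, tx)
    return state


def is_valid_proposal(genesis, transactions, proposed_root):
    """A verifier re-executes the data itself and compares the resulting root."""
    return compute_state_root(execute(genesis, transactions)) == proposed_root


genesis = {"alice": 10, "bob": 0}
batch = [{"sender": "alice", "recipient": "bob", "amount": 3}]
honest_root = compute_state_root(execute(genesis, batch))
dishonest_root = compute_state_root({"alice": 0, "bob": 10})
```

In this model a Proposer would publish `honest_root`, and a Challenger would dispute any proposal for which `is_valid_proposal` returns `False`.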

Key Interaction Diagrams

Depositing and Sending Transactions

Users will often begin their L2 journey by depositing ETH from L1. Once they have ETH to pay fees, they'll start sending transactions on L2. The following diagram demonstrates this interaction and all key OP Stack components which are or should be utilized:

graph TD

subgraph "Ethereum L1"

OptimismPortal(<a href="./protocol/withdrawals.html#the-optimism-portal-contract">OptimismPortal</a>)

BatchInbox(<a href="../glossary.html#batcher-transaction">Batch Inbox Address</a>)

end

Sequencer(Sequencer)

Users(Users)

%% Interactions

Users -->|<b>1.</b> submit deposit| OptimismPortal

Sequencer -.->|<b>2.</b> fetch deposit events| OptimismPortal

Sequencer -->|<b>3.</b> generate deposit block| Sequencer

Users -->|<b>4.</b> send transactions| Sequencer

Sequencer -->|<b>5.</b> submit transaction batches| BatchInbox

classDef l1Contracts stroke:#bbf,stroke-width:2px;

classDef l2Components stroke:#333,stroke-width:2px;

classDef systemUser stroke:#f9a,stroke-width:2px;

class OptimismPortal,BatchInbox l1Contracts;

class Sequencer l2Components;

class Users systemUser;

Withdrawing

Users may also want to withdraw ETH or ERC20 tokens from an OP Stack chain back to Ethereum. Withdrawals are initiated as standard transactions on L2 but are then completed using transactions on L1. Withdrawals must reference a valid FaultDisputeGame contract that proposes the state of the L2 at a given point in time.

graph LR

subgraph "Ethereum L1"

BatchInbox(<a href="../glossary.html#batcher-transaction">Batch Inbox Address</a>)

DisputeGameFactory(<a href="../fault-proof/stage-one/dispute-game-interface.html#disputegamefactory-interface">DisputeGameFactory</a>)

FaultDisputeGame(<a href="../fault-proof/stage-one/fault-dispute-game.html">FaultDisputeGame</a>)

OptimismPortal(<a href="./protocol/withdrawals.html#the-optimism-portal-contract">OptimismPortal</a>)

ExternalContracts(External Contracts)

end

Sequencer(Sequencer)

Proposers(Proposers)

Users(Users)

%% Interactions

Users -->|<b>1.</b> send withdrawal initialization txn| Sequencer

Sequencer -->|<b>2.</b> submit transaction batch| BatchInbox

Proposers -->|<b>3.</b> submit output proposal| DisputeGameFactory

DisputeGameFactory -->|<b>4.</b> generate game| FaultDisputeGame

Users -->|<b>5.</b> submit withdrawal proof| OptimismPortal

Users -->|<b>6.</b> wait for finalization| FaultDisputeGame

Users -->|<b>7.</b> submit withdrawal finalization| OptimismPortal

OptimismPortal -->|<b>8.</b> check game validity| FaultDisputeGame

OptimismPortal -->|<b>9.</b> execute withdrawal transaction| ExternalContracts

%% Styling

classDef l1Contracts stroke:#bbf,stroke-width:2px;

classDef l2Components stroke:#333,stroke-width:2px;

classDef systemUser stroke:#f9a,stroke-width:2px;

class BatchInbox,DisputeGameFactory,FaultDisputeGame,OptimismPortal l1Contracts;

class Sequencer l2Components;

class Users,Proposers systemUser;
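The prove, wait, and finalize steps in the withdrawal diagram can be sketched as a small state machine. This is an illustrative model only: `ToyGame` and `ToyPortal` are hypothetical names, and the sketch omits proof verification, time delays, and every other check the real contracts perform.

```python
from enum import Enum


class GameStatus(Enum):
    IN_PROGRESS = "in_progress"
    DEFENDER_WINS = "defender_wins"      # the proposal survived the dispute
    CHALLENGER_WINS = "challenger_wins"  # the proposal was shown to be invalid


class ToyGame:
    """Hypothetical stand-in for a dispute game that proposes an L2 state."""

    def __init__(self):
        self.status = GameStatus.IN_PROGRESS


class ToyPortal:
    """Hypothetical stand-in for the portal's prove/finalize bookkeeping."""

    def __init__(self):
        self.proven = {}        # withdrawal id -> game it was proven against
        self.finalized = set()  # withdrawal ids that have already been executed

    def prove(self, withdrawal_id, game):
        # Step 5 in the diagram: tie the withdrawal to a proposed L2 state.
        self.proven[withdrawal_id] = game

    def finalize(self, withdrawal_id):
        # Steps 7-9: only execute once the referenced game resolved for the proposal.
        if withdrawal_id in self.finalized:
            raise ValueError("withdrawal already finalized")
        game = self.proven.get(withdrawal_id)
        if game is None:
            raise ValueError("withdrawal has not been proven")
        if game.status is not GameStatus.DEFENDER_WINS:
            raise ValueError("referenced game has not resolved in favor of the proposal")
        self.finalized.add(withdrawal_id)
        return True
```

The key design point the sketch tries to capture is that finalization depends on the state of the referenced game, not on the user's own assertion.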

Next Steps

Check out the sidebar to the left to find any specification you might want to read, or click one of the links embedded in one of the above diagrams to learn about particular components that have been mentioned.

Optimism Overview

This document is a high-level technical overview of the Optimism protocol. It aims to explain how the protocol works in an informal manner, and direct readers to other parts of the specification so that they may learn more.

This document assumes you've read the background.

Architecture Design Goals

- Execution-Level EVM Equivalence: The developer experience should be identical to L1 except where L2 introduces a fundamental difference.

  - No special compiler.

  - No unexpected gas costs.

  - Transaction traces work out-of-the-box.

  - All existing Ethereum tooling works - all you have to do is change the chain ID.

- Maximal compatibility with ETH1 nodes: The implementation should minimize any differences with a vanilla Geth node, and leverage as many existing L1 standards as possible.

  - The execution engine/rollup node uses the ETH2 Engine API to build the canonical L2 chain.

  - The execution engine leverages Geth's existing mempool and sync implementations, including snap sync.

- Minimize state and complexity:

  - Whenever possible, services contributing to the rollup infrastructure are stateless.

  - Stateful services can recover to full operation from a fresh DB using the peer-to-peer network and on-chain sync mechanisms.

  - Running a replica is as simple as running a Geth node.

Architecture Overview

Core L1 Smart Contracts

Below you'll find an architecture diagram describing the core L1 smart contracts for the OP Stack. Smart contracts that are considered "peripheral" and not core to the operation of the OP Stack system are described separately.

graph LR

subgraph "External Contracts"

ExternalERC20(External ERC20 Contracts)

ExternalERC721(External ERC721 Contracts)

end

subgraph "L1 Smart Contracts"

BatchDataEOA(<a href="../glossary.html#batcher-transaction">Batch Inbox Address</a>)

L1StandardBridge(<a href="./bridges.html">L1StandardBridge</a>)

L1ERC721Bridge(<a href="./bridges.html">L1ERC721Bridge</a>)

L1CrossDomainMessenger(<a href="./messengers.html">L1CrossDomainMessenger</a>)

OptimismPortal(<a href="./withdrawals.html#the-optimism-portal-contract">OptimismPortal</a>)

SuperchainConfig(<a href="./superchain-config.html">SuperchainConfig</a>)

SystemConfig(<a href="./system-config.html">SystemConfig</a>)

DisputeGameFactory(<a href="../fault-proof/stage-one/dispute-game-interface.html#disputegamefactory-interface">DisputeGameFactory</a>)

FaultDisputeGame(<a href="../fault-proof/stage-one/fault-dispute-game.html">FaultDisputeGame</a>)

AnchorStateRegistry(<a href="../fault-proof/stage-one/fault-dispute-game.html#anchor-state-registry">AnchorStateRegistry</a>)

DelayedWETH(<a href="../fault-proof/stage-one/bond-incentives.html#delayedweth">DelayedWETH</a>)

end

subgraph "User Interactions (Permissionless)"

Users(Users)

Challengers(Challengers)

end

subgraph "System Interactions"

Guardian(Guardian)

Batcher(<a href="./batcher.html">Batcher</a>)

end

subgraph "Layer 2 Interactions"

L2Nodes(Layer 2 Nodes)

end

L2Nodes -.->|fetch transaction batches| BatchDataEOA

L2Nodes -.->|fetch deposit events| OptimismPortal

Batcher -->|publish transaction batches| BatchDataEOA

ExternalERC20 <-->|mint/burn/transfer tokens| L1StandardBridge

ExternalERC721 <-->|mint/burn/transfer tokens| L1ERC721Bridge

L1StandardBridge <-->|send/receive messages| L1CrossDomainMessenger

L1StandardBridge -.->|query pause state| SuperchainConfig

L1ERC721Bridge <-->|send/receive messages| L1CrossDomainMessenger

L1ERC721Bridge -.->|query pause state| SuperchainConfig

L1CrossDomainMessenger <-->|send/receive messages| OptimismPortal

L1CrossDomainMessenger -.->|query pause state| SuperchainConfig

OptimismPortal -.->|query pause state| SuperchainConfig

OptimismPortal -.->|query config| SystemConfig

OptimismPortal -.->|query state proposals| DisputeGameFactory

DisputeGameFactory -->|generate instances| FaultDisputeGame

FaultDisputeGame -->|store bonds| DelayedWETH

FaultDisputeGame -->|query/update anchor states| AnchorStateRegistry

Users <-->|deposit/withdraw ETH/ERC20s| L1StandardBridge

Users <-->|deposit/withdraw ERC721s| L1ERC721Bridge

Users -->|prove/execute withdrawals| OptimismPortal

Challengers -->|propose output roots| DisputeGameFactory

Challengers -->|verify/challenge/defend proposals| FaultDisputeGame

Guardian -->|pause/unpause| SuperchainConfig

Guardian -->|safety net actions| OptimismPortal

Guardian -->|safety net actions| DisputeGameFactory

Guardian -->|safety net actions| DelayedWETH

classDef extContracts stroke:#ff9,stroke-width:2px;

classDef l1Contracts stroke:#bbf,stroke-width:2px;

classDef l1EOA stroke:#bbb,stroke-width:2px;

classDef userInt stroke:#f9a,stroke-width:2px;

classDef systemUser stroke:#f9a,stroke-width:2px;

classDef l2Nodes stroke:#333,stroke-width:2px

class ExternalERC20,ExternalERC721 extContracts;

class L1StandardBridge,L1ERC721Bridge,L1CrossDomainMessenger,OptimismPortal,SuperchainConfig,SystemConfig,DisputeGameFactory,FaultDisputeGame,DelayedWETH,AnchorStateRegistry l1Contracts;

class BatchDataEOA l1EOA;

class Users,Challengers userInt;

class Batcher,Guardian systemUser;

class L2Nodes l2Nodes;

Notes for Core L1 Smart Contracts

- The Batch Inbox Address described above (highlighted in GREY) is not a smart contract and is instead an arbitrarily selected account that is assumed to have no known private key. The convention for deriving this account's address is provided on the Configurability page.

  - Historically, it was often derived as 0xFF0000....<L2 chain ID> where <L2 chain ID> is chain ID of the Layer 2 network for which the data is being posted. This is why many chains, such as OP Mainnet, have a batch inbox address of this form.

- Smart contracts that sit behind Proxy contracts are highlighted in BLUE. Refer to the Smart Contract Proxies section below to understand how these proxies are designed.

  - The L1CrossDomainMessenger contract sits behind the ResolvedDelegateProxy contract, a legacy proxy contract type used within older versions of the OP Stack. This proxy type is used exclusively for the L1CrossDomainMessenger to maintain backwards compatibility.

  - The L1StandardBridge contract sits behind the L1ChugSplashProxy contract, a legacy proxy contract type used within older versions of the OP Stack. This proxy type is used exclusively for the L1StandardBridge contract to maintain backwards compatibility.

Core L2 Smart Contracts

Here you'll find an architecture diagram describing the core OP Stack smart contracts that exist natively on the L2 chain itself.

graph LR

subgraph "Layer 1 (Ethereum)"

L1SmartContracts(L1 Smart Contracts)

end

subgraph "L2 Client"

L2Node(L2 Node)

end

subgraph "L2 System Contracts"

L1Block(<a href="./predeploys.html#l1block">L1Block</a>)

GasPriceOracle(<a href="./predeploys.html#gaspriceoracle">GasPriceOracle</a>)

L1FeeVault(<a href="./predeploys.html#l1feevault">L1FeeVault</a>)

BaseFeeVault(<a href="./predeploys.html#basefeevault">BaseFeeVault</a>)

SequencerFeeVault(<a href="./predeploys.html#sequencerfeevault">SequencerFeeVault</a>)

end

subgraph "L2 Bridge Contracts"

L2CrossDomainMessenger(<a href="./predeploys.html#l2crossdomainmessenger">L2CrossDomainMessenger</a>)

L2ToL1MessagePasser(<a href="./predeploys.html#l2tol1messagepasser">L2ToL1MessagePasser</a>)

L2StandardBridge(<a href="./predeploys.html#l2standardbridge">L2StandardBridge</a>)

L2ERC721Bridge(<a href="./predeploys.html">L2ERC721Bridge</a>)

end

subgraph "Transactions"

DepositTransaction(Deposit Transaction)

UserTransaction(User Transaction)

end

subgraph "External Contracts"

ExternalERC20(External ERC20 Contracts)

ExternalERC721(External ERC721 Contracts)

end

subgraph "Remaining L2 Universe"

OtherContracts(Any Contracts and Addresses)

end

L2Node -.->|derives chain from| L1SmartContracts

L2Node -->|updates| L1Block

L2Node -->|distributes fees to| L1FeeVault

L2Node -->|distributes fees to| BaseFeeVault

L2Node -->|distributes fees to| SequencerFeeVault

L2Node -->|derives from deposits| DepositTransaction

L2Node -->|derives from chain data| UserTransaction

UserTransaction -->|can trigger| OtherContracts

DepositTransaction -->|maybe triggers| L2CrossDomainMessenger

DepositTransaction -->|can trigger| OtherContracts

ExternalERC20 <-->|mint/burn/transfer| L2StandardBridge

ExternalERC721 <-->|mint/burn/transfer| L2ERC721Bridge

L2StandardBridge <-->|sends/receives messages| L2CrossDomainMessenger

L2ERC721Bridge <-->|sends/receives messages| L2CrossDomainMessenger

GasPriceOracle -.->|queries| L1Block

L2CrossDomainMessenger -->|sends messages| L2ToL1MessagePasser

classDef extContracts stroke:#ff9,stroke-width:2px;

classDef l2Contracts stroke:#bbf,stroke-width:2px;

classDef transactions stroke:#fba,stroke-width:2px;

classDef l2Node stroke:#f9a,stroke-width:2px;

class ExternalERC20,ExternalERC721 extContracts;

class L2CrossDomainMessenger,L2ToL1MessagePasser,L2StandardBridge,L2ERC721Bridge l2Contracts;

class L1Block,L1FeeVault,BaseFeeVault,SequencerFeeVault,GasPriceOracle l2Contracts;

class UserTransaction,DepositTransaction transactions;

class L2Node l2Node;

Notes for Core L2 Smart Contracts

- Contracts highlighted as "L2 System Contracts" are updated or mutated automatically as part of the chain derivation process. Users typically do not mutate these contracts directly, except in the case of the FeeVault contracts where any user may trigger a withdrawal of collected fees to the pre-determined withdrawal address.

- Smart contracts that sit behind Proxy contracts are highlighted in BLUE. Refer to the Smart Contract Proxies section below to understand how these proxies are designed.

- User interactions for the "L2 Bridge Contracts" have been omitted from this diagram but largely follow the same user interactions described in the architecture diagram for the Core L1 Smart Contracts.

Smart Contract Proxies

Most OP Stack smart contracts sit behind Proxy contracts that are managed by a ProxyAdmin contract.

The ProxyAdmin contract is controlled by some owner address that can be any EOA or smart contract.

Below you'll find a diagram that explains the behavior of the typical proxy contract.

graph LR

ProxyAdminOwner(Proxy Admin Owner)

ProxyAdmin(<a href="https://github.com/ethereum-optimism/optimism/blob/develop/packages/contracts-bedrock/src/universal/ProxyAdmin.sol">ProxyAdmin</a>)

subgraph "Logical Smart Contract"

Proxy(<a href="https://github.com/ethereum-optimism/optimism/blob/develop/packages/contracts-bedrock/src/universal/Proxy.sol">Proxy</a>)

Implementation(Implementation)

end

ProxyAdminOwner -->|manages| ProxyAdmin

ProxyAdmin -->|upgrades| Proxy

Proxy -->|delegatecall| Implementation

classDef l1Contracts stroke:#bbf,stroke-width:2px;

classDef systemUser stroke:#f9a,stroke-width:2px;

class Proxy l1Contracts;

class ProxyAdminOwner systemUser;
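The relationship in the diagram above (owner manages ProxyAdmin, ProxyAdmin upgrades Proxy, Proxy delegates to Implementation) can be modeled in a few lines. This is a rough sketch, not the Solidity contracts: `ToyProxy`, `ToyProxyAdmin`, and the two implementation classes are hypothetical, and a shared dictionary stands in for contract storage.

```python
class ImplementationV1:
    """Hypothetical logic contract: increments a counter by one."""

    @staticmethod
    def increment(storage):
        storage["counter"] = storage.get("counter", 0) + 1
        return storage["counter"]


class ImplementationV2:
    """Hypothetical upgraded logic contract: increments by two."""

    @staticmethod
    def increment(storage):
        storage["counter"] = storage.get("counter", 0) + 2
        return storage["counter"]


class ToyProxy:
    def __init__(self, admin, implementation):
        self.admin = admin
        self.implementation = implementation
        self.storage = {}  # state lives in the proxy, not in the implementation

    def upgrade_to(self, caller, new_implementation):
        if caller is not self.admin:
            raise PermissionError("only the admin may upgrade the proxy")
        self.implementation = new_implementation

    def call(self, method, *args):
        # Mimics delegatecall: implementation code runs against the proxy's storage.
        return getattr(self.implementation, method)(self.storage, *args)


class ToyProxyAdmin:
    def __init__(self, owner):
        self.owner = owner

    def upgrade(self, caller, proxy, new_implementation):
        if caller != self.owner:
            raise PermissionError("only the owner may manage the ProxyAdmin")
        proxy.upgrade_to(self, new_implementation)


admin = ToyProxyAdmin(owner="owner")
proxy = ToyProxy(admin, ImplementationV1)
```

Because state is kept in the proxy, an upgrade swaps the logic while the accumulated `counter` value is preserved.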

L2 Node Components

Below you'll find a diagram illustrating the basic interactions between the components that make up an L2 node as well as demonstrations of how different actors use these components to fulfill their roles.

graph LR

subgraph "L2 Node"

RollupNode(<a href="./rollup-node.html">Rollup Node</a>)

ExecutionEngine(<a href="./exec-engine.html">Execution Engine</a>)

end

subgraph "System Interactions"

BatchSubmitter(<a href="./batcher.html">Batch Submitter</a>)

OutputSubmitter(Output Submitter)

Challenger(Challenger)

end

subgraph "L1 Smart Contracts"

BatchDataEOA(<a href="../glossary.html#batcher-transaction">Batch Inbox Address</a>)

OptimismPortal(<a href="./withdrawals.html#the-optimism-portal-contract">OptimismPortal</a>)

DisputeGameFactory(<a href="../fault-proof/stage-one/dispute-game-interface.html#disputegamefactory-interface">DisputeGameFactory</a>)

FaultDisputeGame(<a href="../fault-proof/stage-one/fault-dispute-game.html">FaultDisputeGame</a>)

end

BatchSubmitter -.->|fetch transaction batch info| RollupNode

BatchSubmitter -.->|fetch transaction batch info| ExecutionEngine

BatchSubmitter -->|send transaction batches| BatchDataEOA

RollupNode -.->|fetch transaction batches| BatchDataEOA

RollupNode -.->|fetch deposit transactions| OptimismPortal

RollupNode -->|drives| ExecutionEngine

OutputSubmitter -.->|fetch outputs| RollupNode

OutputSubmitter -->|send output proposals| DisputeGameFactory

Challenger -.->|fetch dispute games| DisputeGameFactory

Challenger -->|verify/challenge/defend games| FaultDisputeGame

classDef l2Components stroke:#333,stroke-width:2px;

classDef systemUser stroke:#f9a,stroke-width:2px;

classDef l1Contracts stroke:#bbf,stroke-width:2px;

class RollupNode,ExecutionEngine l2Components;

class BatchSubmitter,OutputSubmitter,Challenger systemUser;

class BatchDataEOA,OptimismPortal,DisputeGameFactory,FaultDisputeGame l1Contracts;

Transaction/Block Propagation

Since the execution engine (EE) uses Geth under the hood, Optimism uses Geth's built-in peer-to-peer network and transaction pool to propagate transactions. The same network can also be used to propagate submitted blocks and support snap-sync.

Unsubmitted blocks, however, are propagated using a separate peer-to-peer network of Rollup Nodes. This network is optional and is provided as a convenience to lower latency for verifiers and their JSON-RPC clients.

The below diagram illustrates how the sequencer and verifiers fit together:

Key Interactions In Depth

Deposits

Optimism supports two types of deposits: user deposits, and L1 attributes deposits. To perform a user deposit, users call the depositTransaction method on the OptimismPortal contract. This in turn emits TransactionDeposited events, which the rollup node reads during block derivation.

L1 attributes deposits are used to register L1 block attributes (number, timestamp, etc.) on L2 via a call to the L1 Attributes Predeploy. They cannot be initiated by users, and are instead added to L2 blocks automatically by the rollup node.

Both deposit types are represented by a single custom EIP-2718 transaction type on L2.

Block Derivation

Overview

The rollup chain can be deterministically derived given an L1 Ethereum chain. The fact that the entire rollup chain can be derived based on L1 blocks is what makes Optimism a rollup. This process can be represented as:

derive_rollup_chain(l1_blockchain) -> rollup_blockchain

Optimism's block derivation function is designed such that it:

- Requires no state other than what is easily accessible using L1 and L2 execution engine APIs.

- Supports sequencers and sequencer consensus.

- Is resilient to sequencer censorship.

Epochs and the Sequencing Window

The rollup chain is subdivided into epochs. There is a 1:1 correspondence between L1 block numbers and epoch numbers. For L1 block number n, there is a corresponding rollup epoch n which can only be derived after a sequencing window worth of blocks has passed, i.e. after L1 block number n + SEQUENCING_WINDOW_SIZE is added to the L1 chain.

Each epoch contains at least one block. Every block in the epoch contains an L1 info transaction which contains contextual information about L1 such as the block hash and timestamp. The first block in the epoch also contains all deposits initiated via the OptimismPortal contract on L1. All L2 blocks can also contain sequenced transactions, i.e. transactions submitted directly to the sequencer.
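The block layout just described can be sketched as a small helper. This is an illustration under assumptions: `build_epoch_blocks` and the dictionary shapes are hypothetical, and placing deposits ahead of sequenced transactions within the first block is an ordering chosen for the sketch.

```python
def build_epoch_blocks(l1_block, deposits, sequenced_per_block):
    """Return the transaction lists of the L2 blocks that make up one epoch.

    l1_block: dict with the L1 "hash" and "timestamp" for this epoch.
    deposits: deposits initiated on L1 in that block.
    sequenced_per_block: one list of sequenced transactions per L2 block.
    """
    # Every epoch contains at least one block, even with nothing sequenced.
    if not sequenced_per_block:
        sequenced_per_block = [[]]

    blocks = []
    for index, sequenced in enumerate(sequenced_per_block):
        # Every block carries an L1 info transaction with L1 context.
        l1_info_tx = {
            "type": "l1_info",
            "l1_hash": l1_block["hash"],
            "l1_timestamp": l1_block["timestamp"],
        }
        transactions = [l1_info_tx]
        if index == 0:
            # Only the first block of the epoch includes the deposits.
            transactions.extend(deposits)
        transactions.extend(sequenced)
        blocks.append(transactions)
    return blocks


example_blocks = build_epoch_blocks(
    {"hash": "0xabc", "timestamp": 1},
    deposits=["deposit-1"],
    sequenced_per_block=[["tx-1"], ["tx-2"]],
)
```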

Whenever the sequencer creates a new L2 block for a given epoch, it must submit it to L1 as part of a batch, within the epoch's sequencing window (i.e. the batch must land before L1 block n + SEQUENCING_WINDOW_SIZE). These batches, along with the TransactionDeposited L1 events, are what allow the derivation of the L2 chain from the L1 chain.

The sequencer does not need an L2 block to be batch-submitted to L1 in order to build on top of it. In fact, batches typically contain multiple L2 blocks worth of sequenced transactions. This is what enables fast transaction confirmations on the sequencer.

Since transaction batches for a given epoch can be submitted anywhere within the sequencing window, verifiers must search all blocks within the window for transaction batches. This protects against the uncertainty of transaction inclusion on L1. This uncertainty is also why we need the sequencing window in the first place: otherwise the sequencer could retroactively add blocks to an old epoch, and verifiers wouldn't know when they can finalize an epoch.

The sequencing window also prevents censorship by the sequencer: deposits made on a given L1 block will be included in the L2 chain at worst after SEQUENCING_WINDOW_SIZE L1 blocks have passed.
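The window arithmetic in this section can be written out explicitly. The sketch below is a hedged reading of the prose: the helper names are hypothetical, the window size of 5 is an arbitrary illustrative value, and "before L1 block n + SEQUENCING_WINDOW_SIZE" is read as a strict inequality. The exact boundary conditions are defined by the derivation specification, not by this example.

```python
SEQUENCING_WINDOW_SIZE = 5  # illustrative value only; each chain configures its own


def epoch_derivable_at(epoch_number, window_size=SEQUENCING_WINDOW_SIZE):
    """L1 block number that must be on the L1 chain before the epoch can be derived."""
    return epoch_number + window_size


def batch_lands_in_window(epoch_number, inclusion_block, window_size=SEQUENCING_WINDOW_SIZE):
    """True if a batch for `epoch_number` included at `inclusion_block` is in the window."""
    return epoch_number <= inclusion_block < epoch_number + window_size


def blocks_to_search(epoch_number, window_size=SEQUENCING_WINDOW_SIZE):
    """L1 block numbers a verifier scans for batches belonging to the epoch."""
    return list(range(epoch_number, epoch_number + window_size))
```

For epoch 100 under these assumptions, a verifier scans L1 blocks 100 through 104 and treats the epoch as derivable once block 105 exists.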

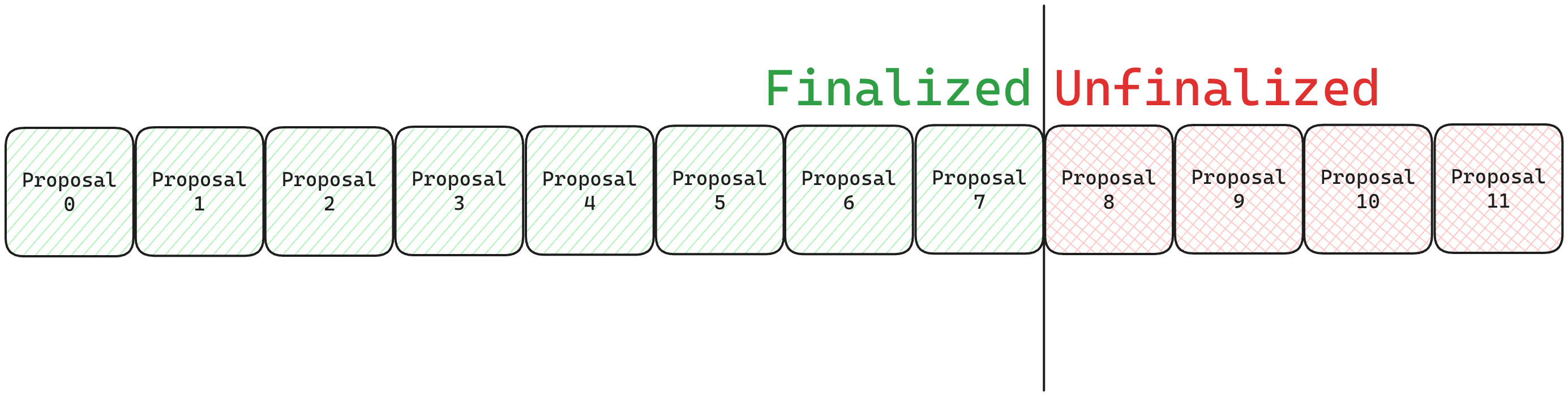

The following diagram describes this relationship, and how L2 blocks are derived from L1 blocks (L1 info transactions have been elided):

Block Derivation Loop

A sub-component of the rollup node called the rollup driver is actually responsible for performing block derivation. The rollup driver is essentially an infinite loop that runs the block derivation function. For each epoch, the block derivation function performs the following steps:

- Downloads deposit and transaction batch data for each block in the sequencing window.

- Converts the deposit and transaction batch data into payload attributes for the Engine API.

- Submits the payload attributes to the Engine API, where they are converted into blocks and added to the canonical chain.

This process is then repeated with incrementing epochs until the tip of L1 is reached.
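A toy version of this loop is sketched below. It is a simplified model under stated assumptions: L1 blocks are plain dictionaries with hypothetical `deposits` and `batches` fields, payload attributes are dictionaries, and appending to a list stands in for submitting to the Engine API. L1 info transactions are omitted.

```python
def derive_rollup_chain(l1_blocks, window_size):
    """Deterministically derive a toy rollup chain from a list of L1 blocks.

    l1_blocks[n] is L1 block number n, shaped like:
        {"deposits": [...], "batches": [{"epoch": int, "txs": [...]}, ...]}
    """
    rollup_chain = []
    epoch = 0
    # An epoch is derived only once L1 block (epoch + window_size) exists.
    while epoch + window_size < len(l1_blocks):
        window = l1_blocks[epoch:epoch + window_size]

        # Step 1: collect deposits and batch data from the sequencing window.
        deposits = l1_blocks[epoch]["deposits"]
        batches = [
            batch
            for block in window
            for batch in block["batches"]
            if batch["epoch"] == epoch
        ]
        if not batches:
            # Every epoch yields at least one block, even without batches.
            batches = [{"epoch": epoch, "txs": []}]

        for index, batch in enumerate(batches):
            # Step 2: convert the data into payload attributes.
            first_block_deposits = list(deposits) if index == 0 else []
            payload_attributes = {
                "epoch": epoch,
                "transactions": first_block_deposits + list(batch["txs"]),
            }
            # Step 3: hand the attributes to the engine (modeled as an append).
            rollup_chain.append(payload_attributes)

        epoch += 1
    return rollup_chain


toy_l1 = [{"deposits": [f"d{n}"], "batches": []} for n in range(4)]
toy_l1[1]["batches"].append({"epoch": 0, "txs": ["a"]})
toy_l1[2]["batches"].append({"epoch": 0, "txs": ["late"]})  # outside epoch 0's window
derived = derive_rollup_chain(toy_l1, window_size=2)
```

Running the function twice over the same L1 data gives the same chain, which is the determinism property the derivation function relies on.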

Engine API

The rollup driver doesn't actually create blocks. Instead, it directs the execution engine to do so via the Engine API. For each iteration of the block derivation loop described above, the rollup driver will craft a payload attributes object and send it to the execution engine. The execution engine will then convert the payload attributes object into a block, and add it to the chain. The basic sequence of the rollup driver is as follows:

1. Call fork choice updated with the payload attributes object. We'll skip over the details of the fork choice state parameter for now - just know that one of its fields is the L2 chain's headBlockHash, and that it is set to the block hash of the tip of the L2 chain. The Engine API returns a payload ID.

2. Call get payload with the payload ID returned in step 1. The Engine API returns a payload object that includes a block hash as one of its fields.

3. Call new payload with the payload returned in step 2. (Ecotone blocks MUST use V3; pre-Ecotone blocks MUST use the V2 version.)

4. Call fork choice updated with the fork choice parameter's headBlockHash set to the block hash returned in step 2. The tip of the L2 chain is now the block created in step 1.
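The four calls above can be traced against an in-memory stand-in for the execution engine. This is a minimal sketch, not the Engine API itself: `ToyEngine` and its method names are hypothetical, the payload ID and block hash formats are invented for the example, and method versioning (V2/V3) is ignored.

```python
import hashlib


class ToyEngine:
    """Hypothetical in-memory execution engine for illustrating the call order."""

    def __init__(self, genesis_hash):
        self.head = genesis_hash
        self.pending = {}  # payload id -> payload under construction
        self.known = {}    # block hash -> payload accepted via new_payload

    def fork_choice_updated(self, head_block_hash, payload_attributes=None):
        if head_block_hash != self.head and head_block_hash not in self.known:
            raise ValueError("unknown head block")
        self.head = head_block_hash
        if payload_attributes is None:
            return None
        payload_id = f"payload-{len(self.pending)}"
        self.pending[payload_id] = {
            "parent": head_block_hash,
            "transactions": list(payload_attributes["transactions"]),
        }
        return payload_id

    def get_payload(self, payload_id):
        body = self.pending[payload_id]
        digest_input = repr((body["parent"], body["transactions"])).encode()
        return {**body, "block_hash": hashlib.sha256(digest_input).hexdigest()}

    def new_payload(self, payload):
        self.known[payload["block_hash"]] = payload
        return "VALID"


def drive_one_block(engine, head_hash, payload_attributes):
    """Run the four-step sequence once and return the new tip's block hash."""
    payload_id = engine.fork_choice_updated(head_hash, payload_attributes)  # step 1
    payload = engine.get_payload(payload_id)                                # step 2
    engine.new_payload(payload)                                             # step 3
    engine.fork_choice_updated(payload["block_hash"])                       # step 4
    return payload["block_hash"]
```

Calling `drive_one_block` repeatedly, each time passing the previously returned hash as `head_hash`, extends the toy chain one block at a time.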

The swimlane diagram below visualizes the process:

Standard Bridges

Overview

The standard bridges are responsible for allowing cross domain ETH and ERC20 token transfers. They are built on top of the cross domain messenger contracts and give a standard interface for depositing tokens.

The bridge works for both L1 native tokens and L2 native tokens. The legacy API is preserved to ensure that existing applications will not experience any problems with the Bedrock StandardBridge contracts.

The L2StandardBridge is a predeploy contract located at 0x4200000000000000000000000000000000000010.

interface StandardBridge {
    event ERC20BridgeFinalized(address indexed localToken, address indexed remoteToken, address indexed from, address to, uint256 amount, bytes extraData);
    event ERC20BridgeInitiated(address indexed localToken, address indexed remoteToken, address indexed from, address to, uint256 amount, bytes extraData);
    event ETHBridgeFinalized(address indexed from, address indexed to, uint256 amount, bytes extraData);
    event ETHBridgeInitiated(address indexed from, address indexed to, uint256 amount, bytes extraData);

    function bridgeERC20(address _localToken, address _remoteToken, uint256 _amount, uint32 _minGasLimit, bytes memory _extraData) external;
    function bridgeERC20To(address _localToken, address _remoteToken, address _to, uint256 _amount, uint32 _minGasLimit, bytes memory _extraData) external;
    function bridgeETH(uint32 _minGasLimit, bytes memory _extraData) payable external;
    function bridgeETHTo(address _to, uint32 _minGasLimit, bytes memory _extraData) payable external;
    function deposits(address, address) view external returns (uint256);
    function finalizeBridgeERC20(address _localToken, address _remoteToken, address _from, address _to, uint256 _amount, bytes memory _extraData) external;
    function finalizeBridgeETH(address _from, address _to, uint256 _amount, bytes memory _extraData) payable external;
    function messenger() view external returns (address);
    function OTHER_BRIDGE() view external returns (address);
}

Token Depositing

The bridgeERC20 function is used to send a token from one domain to another domain. An OptimismMintableERC20 token contract must exist on the remote domain to be able to deposit tokens to that domain. One of these tokens can be deployed using the OptimismMintableERC20Factory contract.
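One way to picture why a mintable token must exist on the remote domain is an escrow-and-mint model. The sketch below is an assumption-laden simplification, not the StandardBridge implementation: `ToyBridge` and its methods are hypothetical, token and account names are plain strings, and the cross-domain message is delivered synchronously instead of through the messenger contracts.

```python
class ToyBridge:
    """One side of a hypothetical two-domain token bridge."""

    def __init__(self, mintable_tokens=()):
        self.other_bridge = None
        self.mintable_tokens = set(mintable_tokens)  # tokens this side mints and burns
        self.deposits = {}  # (local_token, remote_token) -> amount held in escrow
        self.balances = {}  # (token, account) -> amount

    def _credit(self, token, account, amount):
        self.balances[(token, account)] = self.balances.get((token, account), 0) + amount

    def _debit(self, token, account, amount):
        if self.balances.get((token, account), 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[(token, account)] -= amount

    def bridge_erc20_to(self, local_token, remote_token, sender, to, amount):
        self._debit(local_token, sender, amount)
        if local_token not in self.mintable_tokens:
            # Native token: hold it in escrow on this side.
            key = (local_token, remote_token)
            self.deposits[key] = self.deposits.get(key, 0) + amount
        # For a mintable token the debit above models a burn.
        self.other_bridge.finalize_bridge_erc20(remote_token, local_token, to, amount)

    def finalize_bridge_erc20(self, local_token, remote_token, to, amount):
        if local_token not in self.mintable_tokens:
            # Native token: release from escrow.
            key = (local_token, remote_token)
            if self.deposits.get(key, 0) < amount:
                raise ValueError("not enough escrowed tokens")
            self.deposits[key] -= amount
        # For a mintable token the credit below models a mint.
        self._credit(local_token, to, amount)


l1_bridge = ToyBridge()
l2_bridge = ToyBridge(mintable_tokens={"L2TOKEN"})
l1_bridge.other_bridge = l2_bridge
l2_bridge.other_bridge = l1_bridge
l1_bridge.balances[("L1TOKEN", "alice")] = 10
```

In this model, bridging from the native side escrows tokens and mints their representation remotely, while bridging back burns the representation and releases the escrow.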

Upgradability

Both the L1 and L2 standard bridges should be behind upgradable proxies.

Cross Domain Messengers

Overview

The cross domain messengers are responsible for providing a higher level API for developers who are interested in sending cross domain messages. They allow for the ability to replay cross domain messages and sit directly on top of the lower level system contracts responsible for cross domain messaging on L1 and L2.

The CrossDomainMessenger is extended to create both an L1CrossDomainMessenger and an L2CrossDomainMessenger. These contracts are then extended with their legacy APIs to provide backwards compatibility for applications that integrated before the Bedrock system upgrade.

The L2CrossDomainMessenger is a predeploy contract located at

0x4200000000000000000000000000000000000007.

The base CrossDomainMessenger interface is:

interface CrossDomainMessenger {
    event FailedRelayedMessage(bytes32 indexed msgHash);
    event RelayedMessage(bytes32 indexed msgHash);
    event SentMessage(address indexed target, address sender, bytes message, uint256 messageNonce, uint256 gasLimit);
    event SentMessageExtension1(address indexed sender, uint256 value);

    function MESSAGE_VERSION() external view returns (uint16);
    function MIN_GAS_CALLDATA_OVERHEAD() external view returns (uint64);
    function MIN_GAS_CONSTANT_OVERHEAD() external view returns (uint64);
    function MIN_GAS_DYNAMIC_OVERHEAD_DENOMINATOR() external view returns (uint64);
    function MIN_GAS_DYNAMIC_OVERHEAD_NUMERATOR() external view returns (uint64);
    function OTHER_MESSENGER() external view returns (address);
    function baseGas(bytes memory _message, uint32 _minGasLimit) external pure returns (uint64);
    function failedMessages(bytes32) external view returns (bool);
    function messageNonce() external view returns (uint256);
    function relayMessage(
        uint256 _nonce,
        address _sender,
        address _target,
        uint256 _value,
        uint256 _minGasLimit,
        bytes memory _message
    ) external payable returns (bytes memory returnData_);
    function sendMessage(address _target, bytes memory _message, uint32 _minGasLimit) external payable;
    function successfulMessages(bytes32) external view returns (bool);
    function xDomainMessageSender() external view returns (address);
}

Message Passing

The sendMessage function is used to send a cross domain message. To trigger

the execution on the other side, the relayMessage function is called.

Successful messages have their hash stored in the successfulMessages mapping

while unsuccessful messages have their hash stored in the failedMessages

mapping.

The user experience when sending from L1 to L2 is a bit different from sending from L2 to L1. When going from L1 into L2, the user does not need to call relayMessage on L2 themselves. The user pays for L2 gas on L1 and the transaction is automatically pulled into L2, where it is executed. When going from L2 into L1, the user proves their withdrawal on the OptimismPortal, waits for the finalization window to pass, and then finalizes the withdrawal on the OptimismPortal, which calls relayMessage on the L1CrossDomainMessenger to finalize the withdrawal.

Upgradability

The L1 and L2 cross domain messengers should be deployed behind upgradable proxies. This will allow for updating the message version.

Message Versioning

Messages are versioned based on the first 2 bytes of their nonce. Depending on

the version, messages can have a different serialization and hashing scheme.

The first two bytes of the nonce are reserved for version metadata because

a version field was not originally included in the messages themselves, but

a uint256 nonce is so large that we can very easily pack additional data

into that field.
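As a rough illustration of the packing described above, the following Python sketch places a 16-bit version in the top two bytes of a 256-bit nonce and recovers it again. The function and constant names are assumptions made for this example and are not taken from the messenger contracts.

```python
from typing import Tuple

# A uint256 has 256 bits; the version occupies the top 16 bits (2 bytes).
VERSION_SHIFT = 256 - 16
NONCE_MASK = (1 << VERSION_SHIFT) - 1


def encode_versioned_nonce(nonce: int, version: int) -> int:
    """Pack `version` into the first two bytes of a 256-bit nonce (hypothetical helper)."""
    if not 0 <= version < (1 << 16):
        raise ValueError("version must fit in 2 bytes")
    if not 0 <= nonce <= NONCE_MASK:
        raise ValueError("nonce must fit in the remaining 240 bits")
    return (version << VERSION_SHIFT) | nonce


def decode_versioned_nonce(versioned: int) -> Tuple[int, int]:
    """Split a versioned nonce into (nonce, version) (hypothetical helper)."""
    return versioned & NONCE_MASK, versioned >> VERSION_SHIFT
```

A version 0 nonce is numerically identical to the plain nonce, which is why the scheme could be introduced without changing previously issued values.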

Message Version 0

abi.encodeWithSignature(
    "relayMessage(address,address,bytes,uint256)",
    _target,
    _sender,
    _message,
    _messageNonce
);

Message Version 1

abi.encodeWithSignature(
    "relayMessage(uint256,address,address,uint256,uint256,bytes)",
    _nonce,
    _sender,
    _target,
    _value,
    _gasLimit,
    _data
);

Backwards Compatibility Notes

An older version of the messenger contracts had the concept of blocked messages

in a blockedMessages mapping. This functionality was removed from the

messengers because a smart attacker could get around any message blocking

attempts. It also saves gas on finalizing withdrawals.

The concept of a "relay id" and the relayedMessages mapping were removed. It was built as a way to be able to fund third parties who relayed messages on behalf of users, but it was improperly implemented as it was impossible to know if the relayed message actually succeeded.

Deposits

Table of Contents

- Overview

- The Deposited Transaction Type

- Deposit Receipt

- L1 Attributes Deposited Transaction

- Special Accounts on L2

- User-Deposited Transactions

Overview

Deposited transactions, also known as deposits, are transactions which are initiated on L1 and executed on L2. This document outlines a new transaction type for deposits. It also describes how deposits are initiated on L1, along with the authorization and validation conditions on L2.

Vocabulary note: deposited transaction refers specifically to an L2 transaction, while deposit can refer to the transaction at various stages (for instance when it is deposited on L1).

The Deposited Transaction Type

Deposited transactions have the following notable distinctions from existing transaction types:

- They are derived from Layer 1 blocks, and must be included as part of the protocol.

- They do not include signature validation (see User-Deposited Transactions for the rationale).

- They buy their L2 gas on L1 and, as such, the L2 gas is not refundable.

We define a new EIP-2718 compatible transaction type with the prefix 0x7E to represent a deposit transaction.

A deposit has the following fields (rlp encoded in the order they appear here):

- bytes32 sourceHash: the source-hash, uniquely identifies the origin of the deposit.
- address from: The address of the sender account.
- address to: The address of the recipient account, or the null (zero-length) address if the deposited transaction is a contract creation.
- uint256 mint: The ETH value to mint on L2.
- uint256 value: The ETH value to send to the recipient account.
- uint64 gas: The gas limit for the L2 transaction.
- bool isSystemTx: If true, the transaction does not interact with the L2 block gas pool.
  - Note: boolean is disabled (enforced to be false) starting from the Regolith upgrade.
- bytes data: The calldata.
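To make the field ordering concrete, here is a minimal Python sketch of an EIP-2718 envelope for a deposit: the type byte 0x7E followed by the RLP encoding of the fields in the order listed above. The RLP encoder is a small self-contained version written for this example. The helper names, the encoding of a false boolean as an empty byte string, and the representation of a contract-creation `to` as an empty byte string are assumptions of the sketch rather than statements from this specification.

```python
from typing import List, Optional, Union

RLPItem = Union[bytes, List["RLPItem"]]
DEPOSIT_TX_TYPE = 0x7E


def _length_prefix(length: int, offset: int) -> bytes:
    """RLP length header: short form below 56 bytes, long form otherwise."""
    if length < 56:
        return bytes([offset + length])
    length_bytes = length.to_bytes((length.bit_length() + 7) // 8, "big")
    return bytes([offset + 55 + len(length_bytes)]) + length_bytes


def rlp_encode(item: RLPItem) -> bytes:
    """Encode a byte string or a (nested) list of byte strings as RLP."""
    if isinstance(item, bytes):
        if len(item) == 1 and item[0] < 0x80:
            return item  # a single small byte is its own encoding
        return _length_prefix(len(item), 0x80) + item
    payload = b"".join(rlp_encode(child) for child in item)
    return _length_prefix(len(payload), 0xC0) + payload


def uint_to_bytes(value: int) -> bytes:
    """Minimal big-endian encoding; zero becomes the empty byte string."""
    return value.to_bytes((value.bit_length() + 7) // 8, "big")


def encode_deposit_tx(source_hash: bytes, sender: bytes, to: Optional[bytes],
                      mint: int, value: int, gas: int,
                      is_system_tx: bool, data: bytes) -> bytes:
    """Hypothetical helper: type byte followed by the RLP list of deposit fields."""
    fields: List[RLPItem] = [
        source_hash,
        sender,
        to if to is not None else b"",   # assumption: creation uses an empty `to`
        uint_to_bytes(mint),
        uint_to_bytes(value),
        uint_to_bytes(gas),
        b"\x01" if is_system_tx else b"",  # assumption: bool as 0x01 / empty
        data,
    ]
    return bytes([DEPOSIT_TX_TYPE]) + rlp_encode(fields)
```

Calling `encode_deposit_tx` with a 32-byte source hash, two 20-byte addresses, zero mint and value, a gas limit of 21000 and empty calldata yields an 85-byte payload whose first byte is 0x7E.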

In contrast to EIP-155 transactions, this transaction type:

- Does not include a nonce, since it is identified by the sourceHash. API responses still include a nonce attribute:
  - Before Regolith: the nonce is always 0
  - With Regolith: the nonce is set to the depositNonce attribute of the corresponding transaction receipt.
- Does not include signature information, and makes the from address explicit. API responses contain zeroed signature v, r, s values for backwards compatibility.
- Includes new sourceHash, from, mint, and isSystemTx attributes. API responses contain these as additional fields.

We select 0x7E because transaction type identifiers are currently allowed to go up to 0x7F.

Picking a high identifier minimizes the risk that the identifier will be used by another

transaction type on the L1 chain in the future. We don't pick 0x7F itself in case it becomes used

for a variable-length encoding scheme.

Source hash computation

The sourceHash of a deposit transaction is computed based on the origin:

- User-deposited: keccak256(bytes32(uint256(0)), keccak256(l1BlockHash, bytes32(uint256(l1LogIndex)))), where l1BlockHash and l1LogIndex both refer to the inclusion of the deposit log event on L1. l1LogIndex is the index of the deposit event log in the combined list of log events of the block.
- L1 attributes deposited: keccak256(bytes32(uint256(1)), keccak256(l1BlockHash, bytes32(uint256(seqNumber)))), where l1BlockHash refers to the L1 block hash of which the info attributes are deposited, and seqNumber = l2BlockNum - l2EpochStartBlockNum, where l2BlockNum is the L2 block number of the inclusion of the deposit tx in L2, and l2EpochStartBlockNum is the L2 block number of the first L2 block in the epoch.
- Upgrade-deposited: keccak256(bytes32(uint256(2)), keccak256(intent)), where intent is a UTF-8 byte string, identifying the upgrade intent.

Without a sourceHash in a deposit, two different deposited transactions could have the same exact hash.

The outer keccak256 hashes the actual uniquely identifying information with a domain,

to avoid collisions between different types of sources.
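The domain-separated structure of the three source hashes can be sketched in Python as follows. The sketch assumes that the comma-separated arguments of keccak256 are concatenated as 32-byte words before hashing. Because the Python standard library does not ship keccak256 (hashlib.sha3_256 uses different padding and gives different digests), the hash function is passed in as a parameter; all function names here are hypothetical.

```python
from typing import Callable

HashFn = Callable[[bytes], bytes]


def _word(n: int) -> bytes:
    """Equivalent of bytes32(uint256(n)): a 32-byte big-endian word."""
    return n.to_bytes(32, "big")


def _with_domain(domain: int, inner: bytes, keccak256: HashFn) -> bytes:
    """Outer hash: mixes the domain number with the identifying inner hash."""
    return keccak256(_word(domain) + inner)


def user_deposit_source_hash(l1_block_hash: bytes, l1_log_index: int,
                             keccak256: HashFn) -> bytes:
    inner = keccak256(l1_block_hash + _word(l1_log_index))
    return _with_domain(0, inner, keccak256)


def l1_attributes_source_hash(l1_block_hash: bytes, l2_block_num: int,
                              l2_epoch_start_block_num: int,
                              keccak256: HashFn) -> bytes:
    # seqNumber is the L2 block's offset from the first block of its epoch.
    seq_number = l2_block_num - l2_epoch_start_block_num
    inner = keccak256(l1_block_hash + _word(seq_number))
    return _with_domain(1, inner, keccak256)


def upgrade_source_hash(intent: str, keccak256: HashFn) -> bytes:
    inner = keccak256(intent.encode("utf-8"))
    return _with_domain(2, inner, keccak256)
```

To obtain real source hashes, a genuine keccak256 implementation from an Ethereum library has to be supplied as the `keccak256` argument; any other hash only demonstrates the layout.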

The Interop derivation spec introduces two additional kinds of system deposits,

with domains 3 and 4.

We do not use the sender's nonce to ensure uniqueness because this would require an extra L2 EVM state read from the execution engine during block-derivation.

Kinds of Deposited Transactions

Although we define only one new transaction type, we can distinguish between two kinds of deposited transactions, based on their positioning in the L2 block:

- The first transaction MUST be an L1 attributes deposited transaction, followed by
- an array of zero-or-more user-deposited transactions submitted to the deposit feed contract on L1 (called OptimismPortal). User-deposited transactions are only present in the first block of an L2 epoch.

We only define a single new transaction type in order to minimize modifications to L1 client software, and complexity in general.

Validation and Authorization of Deposited Transactions

As noted above, the deposited transaction type does not include a signature for validation. Rather,

authorization is handled by the L2 chain derivation process, which when correctly

applied will only derive transactions with a from address attested to by the logs of the L1

deposit contract.

Execution

In order to execute a deposited transaction:

First, the balance of the from account MUST be increased by the amount of mint.

This is unconditional, and does not revert on deposit failure.

Then, the execution environment for a deposited transaction is initialized based on the transaction's attributes, in exactly the same manner as it would be for an EIP-155 transaction.

The deposit transaction is processed exactly like a type-2 (EIP-1559) transaction, with the exception of:

- No fee fields are verified: the deposit does not have any, as it pays for gas on L1.
- No nonce field is verified: the deposit does not have any, it's uniquely identified by its sourceHash.
- No access-list is processed: the deposit has no access-list, and it is thus processed as if the access-list is empty.
- No check if from is an Externally Owned Account (EOA): the deposit is ensured not to be an EOA through L1 address masking, this may change in future L1 contract-deployments to e.g. enable an account-abstraction like mechanism.
- Before the Regolith upgrade:
  - The execution output states a non-standard gas usage:
    - If isSystemTx is false: execution output states it uses gasLimit gas.
    - If isSystemTx is true: execution output states it uses 0 gas.
- No gas is refunded as ETH (either by not refunding or utilizing the fact the gas-price of the deposit is 0).
- No transaction priority fee is charged. No payment is made to the block fee-recipient.
- No L1-cost fee is charged, as deposits are derived from L1 and do not have to be submitted as data back to it.
- No base fee is charged. The total base fee accounting does not change.

Note that this includes contract-deployment behavior like with regular transactions, and gas metering is the same (with the exception of fee related changes above), including metering of intrinsic gas.

Any non-EVM state-transition error emitted by the EVM execution is processed in a special way:

- It is transformed into an EVM-error: i.e. the deposit will always be included, but its receipt will indicate a failure if it runs into a non-EVM state-transition error, e.g. failure to transfer the specified value amount of ETH due to insufficient account-balance.
- The world state is rolled back to the start of the EVM processing, after the minting part of the deposit.
- The nonce of from in the world state is incremented by 1, making the error equivalent to a native EVM failure. Note that a previous nonce increment may have happened during EVM processing, but this would be rolled back first.
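The ordering of mint, rollback and nonce increment can be illustrated with a toy Python model. It represents the world state as two plain dictionaries and takes the EVM processing step as a callback. The names `apply_deposit`, `run_evm` and `StateTransitionError` are hypothetical and exist only for this sketch; on the success path the nonce increment is assumed to happen inside the callback.

```python
from typing import Callable, Dict


class StateTransitionError(Exception):
    """Stand-in for a non-EVM state-transition error (e.g. insufficient balance)."""


def apply_deposit(balances: Dict[str, int], nonces: Dict[str, int],
                  sender: str, mint: int,
                  run_evm: Callable[[Dict[str, int], Dict[str, int]], None]) -> bool:
    """Apply a deposit and return True on success, False on a failed receipt."""
    # 1. Minting is unconditional and survives a later failure.
    balances[sender] = balances.get(sender, 0) + mint

    # 2. Snapshot the state after the mint, before EVM processing starts.
    saved_balances = dict(balances)
    saved_nonces = dict(nonces)
    try:
        run_evm(balances, nonces)
        return True
    except StateTransitionError:
        # 3. Roll back to the post-mint snapshot ...
        balances.clear()
        balances.update(saved_balances)
        nonces.clear()
        nonces.update(saved_nonces)
        # 4. ... then bump the sender nonce, as a native EVM failure would.
        nonces[sender] = nonces.get(sender, 0) + 1
        return False
```

In this model a failing deposit still leaves the minted balance in place and advances the sender nonce by exactly one, regardless of what the callback changed before it raised.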

Finally, after the above processing, the execution post-processing runs the same: i.e. the gas pool and receipt are processed identically to a regular transaction.

Starting with the Regolith upgrade however, the receipt of deposit transactions is extended with an additional

depositNonce value, storing the nonce value of the from sender as registered before the EVM processing.

Note that the gas used as stated by the execution output is subtracted from the gas pool, but this execution output value has special edge cases before the Regolith upgrade.

Note for application developers: because CALLER and ORIGIN are set to from, the

semantics of using the tx.origin == msg.sender check will not work to determine whether

or not a caller is an EOA during a deposit transaction. Instead, the check could only be useful for

identifying the first call in the L2 deposit transaction. However this check does still satisfy

the common case in which developers are using this check to ensure that the CALLER is unable to

execute code before and after the call.

Nonce Handling

Despite the lack of signature validation, we still increment the nonce of the from account when a deposit transaction is executed. In the context of a deposit-only rollup, this is not necessary for transaction ordering or replay prevention; however, it maintains consistency with the use of nonces during contract creation. It may also simplify integration with downstream tooling (such as wallets and block explorers).

Deposit Receipt

Transaction receipts use standard typing as per EIP-2718.

The Deposit transaction receipt type is equal to a regular receipt,

but extended with an optional depositNonce field.

The RLP-encoded consensus-enforced fields are:

- postStateOrStatus (standard): this contains the transaction status, see EIP-658.
- cumulativeGasUsed (standard): gas used in the block thus far, including this transaction.
  - The actual gas used is derived from the difference in CumulativeGasUsed with the previous transaction.
  - Starting with Regolith, this accounts for the actual gas usage by the deposit, like regular transactions.
- bloom (standard): bloom filter of the transaction logs.
- logs (standard): log events emitted by the EVM processing.
- depositNonce (unique extension): Optional field. The deposit transaction persists the nonce used during execution.
- depositNonceVersion (unique extension): Optional field. The value must be 1 if the field is present
  - Before Canyon, these depositNonce & depositNonceVersion fields must always be omitted.
  - With Canyon, these depositNonce & depositNonceVersion fields must always be included.

Starting with Regolith, the receipt API responses utilize the receipt changes for more accurate response data:

- The depositNonce is included in the receipt JSON data in API responses
- For contract-deployments (when to == null), the depositNonce helps derive the correct contractAddress meta-data, instead of assuming the nonce was zero.
- The cumulativeGasUsed accounts for the actual gas usage, as metered in the EVM processing.

L1 Attributes Deposited Transaction

An L1 attributes deposited transaction is a deposit transaction sent to the L1 attributes predeployed contract.

This transaction MUST have the following values:

- from is 0xdeaddeaddeaddeaddeaddeaddeaddeaddead0001 (the address of the L1 Attributes depositor account)
- to is 0x4200000000000000000000000000000000000015 (the address of the L1 attributes predeployed contract).
- mint is 0
- value is 0
- gasLimit is set to 150,000,000 prior to the Regolith upgrade, and 1,000,000 after.
- isSystemTx is set to true prior to the Regolith upgrade, and false after.
- data is an encoded call to the L1 attributes predeployed contract that depends on the upgrades that are active (see below).

This system-initiated transaction for L1 attributes is not charged any ETH for its allocated

gasLimit, as it is considered part of state-transition processing.

L1 Attributes Deposited Transaction Calldata

L1 Attributes - Bedrock, Canyon, Delta

The data field of the L1 attributes deposited transaction is an ABI encoded call to the

setL1BlockValues() function with correct values associated with the corresponding L1 block

(cf. reference implementation).

Special Accounts on L2

The L1 attributes deposit transaction involves two special purpose accounts:

- The L1 attributes depositor account

- The L1 attributes predeployed contract

L1 Attributes Depositor Account

The depositor account is an EOA with no known private key. It has the address

0xdeaddeaddeaddeaddeaddeaddeaddeaddead0001. Its value is returned by the CALLER and ORIGIN

opcodes during execution of the L1 attributes deposited transaction.

L1 Attributes Predeployed Contract

A predeployed contract on L2 at address 0x4200000000000000000000000000000000000015, which holds

certain block variables from the corresponding L1 block in storage, so that they may be accessed

during the execution of the subsequent deposited transactions.

The predeploy stores the following values:

- L1 block attributes:
  - number (uint64)
  - timestamp (uint64)
  - basefee (uint256)
  - hash (bytes32)
- sequenceNumber (uint64): This equals the L2 block number relative to the start of the epoch, i.e. the L2 block distance to the L2 block height that the L1 attributes last changed, and reset to 0 at the start of a new epoch.
- System configurables tied to the L1 block, see System configuration specification:
  - batcherHash (bytes32): A versioned commitment to the batch-submitter(s) currently operating.
  - overhead (uint256): The L1 fee overhead to apply to L1 cost computation of transactions in this L2 block.
  - scalar (uint256): The L1 fee scalar to apply to L1 cost computation of transactions in this L2 block.

The contract implements an authorization scheme, such that it only accepts state-changing calls from the depositor account.

The contract has the following solidity interface, and can be interacted with according to the contract ABI specification.

L1 Attributes Predeployed Contract: Reference Implementation

A reference implementation of the L1 Attributes predeploy contract can be found in L1Block.sol.

User-Deposited Transactions

User-deposited transactions are deposited transactions generated by the L2 Chain Derivation process. The contents of each user-deposited transaction are determined by the corresponding TransactionDeposited event emitted by the deposit contract on L1.

- from is unchanged from the emitted value (though it may have been transformed to an alias in OptimismPortal, the deposit feed contract).
- to is any 20-byte address (including the zero address)
  - In case of a contract creation (cf. isCreation), this address is set to null.
- mint is set to the emitted value.
- value is set to the emitted value.
- gasLimit is unchanged from the emitted value. It must be at least 21000.
- isCreation is set to true if the transaction is a contract creation, false otherwise.
- data is unchanged from the emitted value. Depending on the value of isCreation it is handled as either calldata or contract initialization code.
- isSystemTx is set by the rollup node for certain transactions that have unmetered execution. It is false for user deposited transactions.

Deposit Contract

The deposit contract is deployed to L1. Deposited transactions are derived from the values in

the TransactionDeposited event(s) emitted by the deposit contract.

The deposit contract is responsible for maintaining the guaranteed gas market, charging deposits for gas to be used on L2, and ensuring that the total amount of guaranteed gas in a single L1 block does not exceed the L2 block gas limit.

The deposit contract handles two special cases:

- A contract creation deposit, which is indicated by setting the isCreation flag to true. In the event that the to address is non-zero, the contract will revert.
- A call from a contract account, in which case the from value is transformed to its L2 alias.

Address Aliasing

If the caller is a contract, the address will be transformed by adding

0x1111000000000000000000000000000000001111 to it. The math is unchecked and done on a

Solidity uint160 so the value will overflow. This prevents attacks in which a

contract on L1 has the same address as a contract on L2 but doesn't have the same code. We can safely ignore this

for EOAs because they're guaranteed to have the same "code" (i.e. no code at all). This also makes

it possible for users to interact with contracts on L2 even when the Sequencer is down.
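The aliasing arithmetic is small enough to show directly. The Python sketch below models the unchecked uint160 addition by masking to 160 bits, and adds an inverse helper for illustration. Both function names are assumptions of this example.

```python
# Offset added to the L1 contract address, as given in the text above.
ALIAS_OFFSET = 0x1111000000000000000000000000000000001111
# Addresses are 160 bits wide; masking reproduces unchecked uint160 overflow.
UINT160_MASK = (1 << 160) - 1


def apply_l1_to_l2_alias(l1_address: int) -> int:
    """Return the L2 alias of an L1 contract address (hypothetical helper)."""
    return (l1_address + ALIAS_OFFSET) & UINT160_MASK


def undo_l1_to_l2_alias(l2_address: int) -> int:
    """Recover the L1 address from its L2 alias (hypothetical helper)."""
    return (l2_address - ALIAS_OFFSET) & UINT160_MASK
```

Because the addition wraps around, every L1 address has exactly one alias and the mapping can always be inverted, including for addresses near the top of the 160-bit range.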

Deposit Contract Implementation: Optimism Portal

A reference implementation of the deposit contract can be found in OptimismPortal.sol.

Withdrawals

Table of Contents

- Overview

- Withdrawal Flow

- The L2ToL1MessagePasser Contract

- The Optimism Portal Contract

- Withdrawal Verification and Finalization

- Security Considerations

Overview

Withdrawals are cross domain transactions which are initiated on L2, and finalized by a transaction executed on L1. Notably, withdrawals may be used by an L2 account to call an L1 contract, or to transfer ETH from an L2 account to an L1 account.

Vocabulary note: withdrawal can refer to the transaction at various stages of the process, but we introduce more specific terms to differentiate:

- A withdrawal initiating transaction refers specifically to a transaction on L2 sent to the Withdrawals predeploy.

- A withdrawal proving transaction refers specifically to an L1 transaction which proves the withdrawal is correct (that it has been included in a merkle tree whose root is available on L1).

- A withdrawal finalizing transaction refers specifically to an L1 transaction which finalizes and relays the withdrawal.

Withdrawals are initiated on L2 via a call to the Message Passer predeploy contract, which records the important

properties of the message in its storage.

Withdrawals are proven on L1 via a call to the OptimismPortal, which proves the inclusion of this withdrawal message.

Withdrawals are finalized on L1 via a call to the OptimismPortal contract,

which verifies that the fault challenge period has passed since the withdrawal message has been proved.

In this way, withdrawals are different from deposits, which make use of a special transaction type in the execution engine client. Rather, withdrawal transactions must use smart contracts on L1 for finalization.

Withdrawal Flow

We first describe the end to end flow of initiating and finalizing a withdrawal:

On L2

An L2 account sends a withdrawal message (and possibly also ETH) to the L2ToL1MessagePasser predeploy contract.

This is a very simple contract that stores the hash of the withdrawal data.

On L1

- A relayer submits a withdrawal proving transaction with the required inputs to the OptimismPortal contract. The relayer is not necessarily the same entity which initiated the withdrawal on L2. These inputs include the withdrawal transaction data, inclusion proofs, and a block number. The block number must be one for which an L2 output root exists, which commits to the withdrawal as registered on L2.
- The OptimismPortal contract retrieves the output root for the given block number from the L2OutputOracle's getL2Output() function, and performs the remainder of the verification process internally.
- If proof verification fails, the call reverts. Otherwise the hash is recorded to prevent it from being re-proven. Note that the withdrawal can be proven more than once if the corresponding output root changes.
- After the withdrawal is proven, it enters a 7 day challenge period, allowing time for other network participants to challenge the integrity of the corresponding output root.
- Once the challenge period has passed, a relayer submits a withdrawal finalizing transaction to the OptimismPortal contract. The relayer doesn't need to be the same entity that initiated the withdrawal on L2.
- The OptimismPortal contract receives the withdrawal transaction data and verifies that the withdrawal has both been proven and passed the challenge period.
- If the requirements are not met, the call reverts. Otherwise the call is forwarded, and the hash is recorded to prevent it from being replayed.
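The bookkeeping behind the prove and finalize steps above can be modelled in a few lines of Python. This is a simplified sketch: it skips proof verification and the forwarded call entirely, treats reverts as exceptions, and assumes finalization is allowed once at least the full challenge period has elapsed since proving. The class and method names are hypothetical.

```python
from typing import Dict, Set, Tuple

CHALLENGE_PERIOD_SECONDS = 7 * 24 * 60 * 60  # the 7 day period from the text


class PortalModel:
    """Toy model of the prove/finalize state kept per withdrawal hash."""

    def __init__(self) -> None:
        # withdrawal hash -> (output root it was proven against, proof time)
        self.proven: Dict[bytes, Tuple[bytes, int]] = {}
        self.finalized: Set[bytes] = set()

    def prove(self, withdrawal_hash: bytes, output_root: bytes, now: int) -> None:
        if withdrawal_hash in self.finalized:
            raise ValueError("withdrawal already finalized")
        previous = self.proven.get(withdrawal_hash)
        # Re-proving is only allowed when the output root has changed.
        if previous is not None and previous[0] == output_root:
            raise ValueError("withdrawal already proven against this output root")
        self.proven[withdrawal_hash] = (output_root, now)

    def finalize(self, withdrawal_hash: bytes, now: int) -> None:
        if withdrawal_hash in self.finalized:
            raise ValueError("withdrawal already finalized")
        if withdrawal_hash not in self.proven:
            raise ValueError("withdrawal has not been proven")
        _, proven_at = self.proven[withdrawal_hash]
        if now - proven_at < CHALLENGE_PERIOD_SECONDS:
            raise ValueError("challenge period has not passed")
        # Recording the hash prevents the withdrawal from being replayed.
        self.finalized.add(withdrawal_hash)
```

Note that re-proving against a new output root restarts the challenge period in this model, since the stored proof time is overwritten.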

The L2ToL1MessagePasser Contract

A withdrawal is initiated by calling the L2ToL1MessagePasser contract's initiateWithdrawal function.

The L2ToL1MessagePasser is a simple predeploy contract at 0x4200000000000000000000000000000000000016

which stores messages to be withdrawn.

interface L2ToL1MessagePasser {
    event MessagePassed(
        uint256 indexed nonce, // this is a global nonce value for all withdrawal messages
        address indexed sender,
        address indexed target,
        uint256 value,
        uint256 gasLimit,
        bytes data,
        bytes32 withdrawalHash
    );

    event WithdrawerBalanceBurnt(uint256 indexed amount);

    function burn() external;

    function initiateWithdrawal(address _target, uint256 _gasLimit, bytes memory _data) payable external;

    // Interface functions must be declared external, not public.
    function messageNonce() external view returns (uint256);

    function sentMessages(bytes32) view external returns (bool);
}

The MessagePassed event includes all of the data that is hashed and

stored in the sentMessages mapping, as well as the hash itself.

Addresses are not Aliased on Withdrawals

When a contract makes a deposit, the sender's address is aliased. The same is not true of withdrawals, which do not modify the sender's address. The difference is that:

- on L2, the deposit sender's address is returned by the CALLER opcode, meaning a contract cannot easily tell if the call originated on L1 or L2, whereas
- on L1, the withdrawal sender's address is accessed by calling the l2Sender() function on the OptimismPortal contract.

Calling l2Sender() removes any ambiguity about which domain the call originated from. Still, developers will need to

recognize that having the same address does not imply that a contract on L2 will behave the same as a contract on L1.

The Optimism Portal Contract

The Optimism Portal serves as both the entry and exit point to the Optimism L2. It is a contract which inherits from the OptimismPortal contract, and in addition provides the following interface for withdrawals:

interface OptimismPortal {
    event WithdrawalFinalized(bytes32 indexed withdrawalHash, bool success);

    // Visibility and mutability must precede the returns clause.
    function l2Sender() external view returns (address);

    function proveWithdrawalTransaction(
        Types.WithdrawalTransaction memory _tx,
        uint256 _l2OutputIndex,
        Types.OutputRootProof calldata _outputRootProof,
        bytes[] calldata _withdrawalProof
    ) external;

    function finalizeWithdrawalTransaction(
        Types.WithdrawalTransaction memory _tx
    ) external;
}

Withdrawal Verification and Finalization

The following inputs are required to prove and finalize a withdrawal:

- Withdrawal transaction data:
  - nonce: Nonce for the provided message.
  - sender: Message sender address on L2.
  - target: Target address on L1.
  - value: ETH to send to the target.
  - data: Data to send to the target.
  - gasLimit: Gas to be forwarded to the target.
- Proof and verification data:
  - l2OutputIndex: The index in the L2 outputs where the applicable output root may be found.
  - outputRootProof: Four bytes32 values which are used to derive the output root.
  - withdrawalProof: An inclusion proof for the given withdrawal in the L2ToL1MessagePasser contract.

These inputs must satisfy the following conditions:

- The l2OutputIndex must be the index in the L2 outputs that contains the applicable output root.
- L2OutputOracle.getL2Output(l2OutputIndex) returns a non-zero OutputProposal.
- The keccak256 hash of the outputRootProof values is equal to the outputRoot.
- The withdrawalProof is a valid inclusion proof demonstrating that a hash of the Withdrawal transaction data is contained in the storage of the L2ToL1MessagePasser contract on L2.

Security Considerations

Key Properties of Withdrawal Verification

- It should not be possible to 'double spend' a withdrawal, i.e. to relay a withdrawal on L1 which does not correspond to a message initiated on L2. For reference, see this writeup of a vulnerability of this type found on Polygon.
- For each withdrawal initiated on L2 (i.e. with a unique messageNonce()), the following properties must hold:
  - It should only be possible to prove the withdrawal once, unless the outputRoot for the withdrawal has changed.
  - It should only be possible to finalize the withdrawal once.
  - It should not be possible to relay the message with any of its fields modified, i.e.
    - Modifying the sender field would enable a 'spoofing' attack.
    - Modifying the target, data, or value fields would enable an attacker to dangerously change the intended outcome of the withdrawal.
    - Modifying the gasLimit could make the cost of relaying too high, or allow the relayer to cause execution to fail (out of gas) in the target.

Handling Successfully Verified Messages That Fail When Relayed

If the execution of the relayed call fails in the target contract, it is unfortunately not possible to determine whether or not it was 'supposed' to fail, and whether or not it should be 'replayable'. For this reason, and to minimize complexity, we have not provided any replay functionality; this may be implemented in external utility contracts if desired.

OptimismPortal can send arbitrary messages on L1

The L2ToL1MessagePasser contract's initiateWithdrawal function accepts a _target address and _data bytes, which are passed to a CALL opcode on L1 when finalizeWithdrawalTransaction is called after the challenge period. This means that, by design, the OptimismPortal contract can be used to send arbitrary transactions on the L1, with the OptimismPortal as the msg.sender.

This means users of the OptimismPortal contract should be careful what permissions they grant to the portal.

For example, any ERC20 tokens mistakenly sent to the OptimismPortal contract are essentially lost, as they can

be claimed by anybody that pre-approves transfers of this token out of the portal, using the L2 to initiate the

approval and the L1 to prove and finalize the approval (after the challenge period).

Guaranteed Gas Fee Market

Table of Contents

- Overview

- Gas Stipend

- Default Values

- Limiting Guaranteed Gas

- Rationale for burning L1 Gas

- On Preventing Griefing Attacks

Overview

Deposited transactions are transactions on L2 that are initiated on L1. The gas that they use on L2 is bought on L1 via a gas burn (or a direct payment in the future). We maintain a fee market and hard cap on the amount of gas provided to all deposits in a single L1 block.

The gas provided to deposited transactions is sometimes called "guaranteed gas". The gas provided to deposited transactions is unique in the regard that it is not refundable. It cannot be refunded as it is sometimes paid for with a gas burn and there may not be any ETH left to refund.

The guaranteed gas is composed of a gas stipend, and of any guaranteed gas the user would like to purchase (on L1) on top of that.

Guaranteed gas on L2 is bought in the following manner. An L2 gas price is calculated via an EIP-1559-style algorithm. The total amount of ETH required to buy that gas is then calculated as (guaranteed gas * L2 deposit base fee). The contract then either accepts that amount of ETH (planned for a future upgrade) or burns an amount of L1 gas that corresponds to the L2 cost (L2 cost / L1 base fee); the gas burn is the only method available right now. The L2 gas price for guaranteed gas is not synchronized with the base fee on L2 and will likely be different.

Gas Stipend

To offset the gas spent on the deposit event, we credit gas spent * L1 base fee ETH to the cost

of the L2 gas, where gas spent is the amount of L1 gas spent processing the deposit. If the ETH

value of this credit is greater than the ETH value of the requested guaranteed gas (requested guaranteed gas * L2 gas price), no L1 gas is burnt.
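The purchase and stipend arithmetic described in this and the preceding section can be sketched as a single Python function. All prices are in wei per gas. The function name, the parameter names and the use of integer (floor) division are assumptions of this example, not a description of the contract code.

```python
def l1_gas_to_burn(requested_guaranteed_gas: int, l2_deposit_base_fee: int,
                   l1_base_fee: int, l1_gas_spent: int) -> int:
    """Return the amount of extra L1 gas to burn for a deposit (hypothetical helper)."""
    if l1_base_fee <= 0:
        raise ValueError("l1_base_fee must be positive")

    # ETH cost of the requested L2 gas: guaranteed gas * L2 deposit base fee.
    l2_cost_wei = requested_guaranteed_gas * l2_deposit_base_fee

    # Stipend: L1 gas already spent on the deposit, valued at the L1 base fee.
    credit_wei = l1_gas_spent * l1_base_fee

    # If the credit covers the whole cost, nothing needs to be burnt.
    if credit_wei >= l2_cost_wei:
        return 0

    # Otherwise convert the uncovered ETH cost back into L1 gas.
    return (l2_cost_wei - credit_wei) // l1_base_fee
```

For example, 100,000 guaranteed gas at an L2 deposit base fee of 1 gwei costs 10^14 wei. At an L1 base fee of 20 gwei this corresponds to 5,000 L1 gas, so a deposit that has already spent 3,000 L1 gas burns a further 2,000.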

Default Values

| Variable | Value |
|---|---|
| MAX_RESOURCE_LIMIT | 20,000,000 |
| ELASTICITY_MULTIPLIER | 10 |
| BASE_FEE_MAX_CHANGE_DENOMINATOR | 8 |
| MINIMUM_BASE_FEE | 1 gwei |
| MAXIMUM_BASE_FEE | type(uint128).max |
| SYSTEM_TX_MAX_GAS | 1,000,000 |
| TARGET_RESOURCE_LIMIT | MAX_RESOURCE_LIMIT / ELASTICITY_MULTIPLIER |
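To make the derived quantities concrete, the sketch below evaluates TARGET_RESOURCE_LIMIT from the defaults and works out the largest per-block base fee movements they imply. The two bounds are derived from the EIP-1559-style update rule in this specification and ignore integer truncation and clamping; the variable names max_increase and max_decrease are introduced here for illustration.

```python
from fractions import Fraction

MAX_RESOURCE_LIMIT = 20_000_000
ELASTICITY_MULTIPLIER = 10
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8

TARGET_RESOURCE_LIMIT = MAX_RESOURCE_LIMIT // ELASTICITY_MULTIPLIER

# Relative change after a block that buys the whole limit:
# (MAX - TARGET) / TARGET / DENOMINATOR
max_increase = Fraction(MAX_RESOURCE_LIMIT - TARGET_RESOURCE_LIMIT,
                        TARGET_RESOURCE_LIMIT) / BASE_FEE_MAX_CHANGE_DENOMINATOR

# Relative change after a block that buys nothing: TARGET / TARGET / DENOMINATOR
max_decrease = Fraction(1, BASE_FEE_MAX_CHANGE_DENOMINATOR)

print(TARGET_RESOURCE_LIMIT)  # 2000000
print(max_increase)           # 9/8
print(max_decrease)           # 1/8
```

With these defaults the deposit base fee can more than double after a full block, but falls by at most one eighth after an empty one.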

Limiting Guaranteed Gas

The total amount of guaranteed gas that can be bought in a single L1 block must be limited to prevent a denial of service attack against L2 as well as ensure the total amount of guaranteed gas stays below the L2 block gas limit.

We set a guaranteed gas limit of MAX_RESOURCE_LIMIT gas per L1 block and a target of MAX_RESOURCE_LIMIT / ELASTICITY_MULTIPLIER gas per L1 block. These numbers enable occasional large transactions while staying within our target and maximum gas usage on L2.

Because the amount of guaranteed L2 gas that can be purchased in a single block is now limited, we implement an EIP-1559-style fee market to reduce congestion on deposits. By setting the limit at a multiple of the target, we enable deposits to temporarily use more L2 gas at a greater cost.

# Pseudocode to update the L2 deposit base fee and cap the amount of guaranteed gas
# bought in a block. Calling code must handle the gas burn and validity checks on
# the ability of the account to afford this gas.

# prev_base_fee is a u128, prev_bought_gas and prev_num are u64s
prev_base_fee, prev_bought_gas, prev_num = <values from previous update>
now_num = block.number

# Clamp the full base fee to a specific range. The minimum value in the range should be around 100-1000
# to enable faster responses in the base fee. This replaces the `max` mechanism in the ethereum 1559
# implementation (it also serves to enable the base fee to increase if it is very small).
def clamp(v: i256, min: u128, max: u128) -> u128:
    if v < i256(min):
        return min
    elif v > i256(max):
        return max
    else:
        return u128(v)

# If this is a new block, update the base fee and reset the total gas
# If not, just update the total gas
if prev_num == now_num:
    now_base_fee = prev_base_fee
    now_bought_gas = prev_bought_gas + requested_gas
else:
    # Width extension and conversion to signed integer math
    gas_used_delta = int128(prev_bought_gas) - int128(TARGET_RESOURCE_LIMIT)
    # Use truncating (round to 0) division - solidity's default.
    # Sign extend gas_used_delta & prev_base_fee to 256 bits to avoid overflows here.
    base_fee_per_gas_delta = prev_base_fee * gas_used_delta / TARGET_RESOURCE_LIMIT / BASE_FEE_MAX_CHANGE_DENOMINATOR
    now_base_fee_wide = prev_base_fee + base_fee_per_gas_delta

    now_base_fee = clamp(now_base_fee_wide, min=MINIMUM_BASE_FEE, max=MAXIMUM_BASE_FEE)
    now_bought_gas = requested_gas

    # If we skipped multiple blocks between the previous block and now update the base fee again.
    # This is not exactly the same as iterating the above function, but quite close for reasonable
    # gas target values. It is also constant time wrt the number of missed blocks which is important
    # for keeping gas usage stable.
    if prev_num + 1 < now_num:
        n = now_num - prev_num - 1
        # Apply 7/8 reduction to now_base_fee for the n empty blocks in a row.
        now_base_fee_wide = now_base_fee * pow(1-(1/BASE_FEE_MAX_CHANGE_DENOMINATOR), n)
        now_base_fee = clamp(now_base_fee_wide, min=MINIMUM_BASE_FEE, max=MAXIMUM_BASE_FEE)

require(now_bought_gas < MAX_RESOURCE_LIMIT)

store_values(now_base_fee, now_bought_gas, now_num)
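The pseudocode above can be turned into a small runnable model. The sketch below is one possible translation, not the contract's implementation: ResourceParams, trunc_div and update are names introduced here, the default values are taken from the table in this section, and the decay over skipped blocks is computed with exact integer arithmetic, whereas an on-chain implementation may use a fixed-point approximation.

```python
from dataclasses import dataclass

MAX_RESOURCE_LIMIT = 20_000_000
ELASTICITY_MULTIPLIER = 10
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8
MINIMUM_BASE_FEE = 10**9          # 1 gwei, in wei
MAXIMUM_BASE_FEE = 2**128 - 1     # type(uint128).max
TARGET_RESOURCE_LIMIT = MAX_RESOURCE_LIMIT // ELASTICITY_MULTIPLIER

@dataclass
class ResourceParams:
    base_fee: int     # L2 deposit base fee, in wei
    bought_gas: int   # guaranteed gas bought so far in block_num
    block_num: int    # L1 block number of the last update

def clamp(v: int, lo: int, hi: int) -> int:
    return max(lo, min(v, hi))

def trunc_div(a: int, b: int) -> int:
    """Division rounding toward zero, like Solidity's signed division."""
    q = abs(a) // abs(b)
    return q if (a >= 0) == (b >= 0) else -q

def update(prev: ResourceParams, now_num: int, requested_gas: int) -> ResourceParams:
    if prev.block_num == now_num:
        # Same L1 block: keep the base fee, accumulate the bought gas.
        base_fee = prev.base_fee
        bought = prev.bought_gas + requested_gas
    else:
        # New L1 block: move the base fee toward the target, reset the counter.
        gas_used_delta = prev.bought_gas - TARGET_RESOURCE_LIMIT
        delta = trunc_div(trunc_div(prev.base_fee * gas_used_delta, TARGET_RESOURCE_LIMIT),
                          BASE_FEE_MAX_CHANGE_DENOMINATOR)
        base_fee = clamp(prev.base_fee + delta, MINIMUM_BASE_FEE, MAXIMUM_BASE_FEE)
        bought = requested_gas
        if prev.block_num + 1 < now_num:
            # n empty blocks in between: multiply by (7/8)^n in one step.
            n = now_num - prev.block_num - 1
            d = BASE_FEE_MAX_CHANGE_DENOMINATOR
            base_fee = clamp(base_fee * (d - 1) ** n // d ** n,
                             MINIMUM_BASE_FEE, MAXIMUM_BASE_FEE)
    if not bought < MAX_RESOURCE_LIMIT:
        raise ValueError("guaranteed gas limit exceeded for this L1 block")
    return ResourceParams(base_fee, bought, now_num)

# Previous block bought twice the target, so the base fee rises by 1/8.
busy = update(ResourceParams(10 * 10**9, 4_000_000, 100), now_num=101, requested_gas=50_000)
print(busy.base_fee)   # 11250000000

# Previous block was exactly on target, then two blocks were skipped: (7/8)^2 decay.
idle = update(ResourceParams(10 * 10**9, 2_000_000, 100), now_num=103, requested_gas=0)
print(idle.base_fee)   # 7656250000
```

A deposit that would push the per-block total to the limit raises an error in this model, which corresponds to the deposit reverting.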

Rationale for burning L1 Gas

There must be a sybil resistance mechanism for usage of the network. If it is very cheap to get guaranteed gas on L2, then it would be possible to spam the network. Burning a dynamic amount of gas on L1 acts as a sybil resistance mechanism as it becomes more expensive with more demand.

If we collect ETH directly to pay for L2 gas, every (indirect) caller of the deposit function will need to be marked as payable. This won't be possible for many existing projects. Burning L1 gas avoids that requirement but is, unfortunately, quite wasteful. As such, we will provide two options to buy L2 gas:

1. Burn L1 Gas
2. Send ETH to the Optimism Portal (Not yet supported)

The payable version (Option 2) will likely have a discount applied to it (or conversely, Option 1 has a premium applied to it).

For the initial release of Bedrock, only Option 1 is supported.

On Preventing Griefing Attacks

The cost of purchasing all of the deposit gas in every block must be expensive enough to prevent attackers from griefing all deposits to the network. An attacker would observe a deposit in the mempool and frontrun it with a deposit that purchases enough gas such that the other deposit reverts. The smaller the max resource limit is, the easier this attack is to pull off. This attack is mitigated by having a large resource limit as well as a large elasticity multiplier. This means that the target resource usage is kept small, giving a lot of room for the deposit base fee to rise when the max resource limit is being purchased.

This attack should be too expensive to pull off in practice, but if an extremely wealthy adversary does decide to grief network deposits for an extended period of time, efforts will be made to ensure that deposits can still be processed on the network.
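To give a feel for how quickly this attack becomes expensive, the sketch below estimates the cost of buying the whole resource limit in consecutive L1 blocks under the default values. It is an idealized model: it ignores integer truncation, the MAXIMUM_BASE_FEE clamp, and the fact that the purchased amount must stay strictly below the limit. The function name and the starting base fee are assumptions made for this example.

```python
from fractions import Fraction

MAX_RESOURCE_LIMIT = 20_000_000
ELASTICITY_MULTIPLIER = 10
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8
WEI_PER_ETH = 10**18

# Growth factor of the deposit base fee after a block that buys the full limit:
# 1 + (ELASTICITY_MULTIPLIER - 1) / BASE_FEE_MAX_CHANGE_DENOMINATOR = 17/8.
FULL_BLOCK_GROWTH = 1 + Fraction(ELASTICITY_MULTIPLIER - 1, BASE_FEE_MAX_CHANGE_DENOMINATOR)

def griefing_costs_wei(start_base_fee_wei: int, num_blocks: int) -> list:
    """Cost (in wei) of buying the full limit in each of num_blocks consecutive blocks."""
    costs = []
    base_fee = Fraction(start_base_fee_wei)
    for _ in range(num_blocks):
        costs.append(MAX_RESOURCE_LIMIT * base_fee)
        base_fee *= FULL_BLOCK_GROWTH   # the next block is more expensive
    return costs

# Start from the 1 gwei minimum base fee and sustain the attack for 20 blocks.
costs = griefing_costs_wei(10**9, 20)
print(f"first block: {float(costs[0] / WEI_PER_ETH):.4f} ETH")
print(f"last block:  {float(costs[-1] / WEI_PER_ETH):.1f} ETH")
print(f"total:       {float(sum(costs) / WEI_PER_ETH):.1f} ETH")
```

Because the cost grows geometrically by a factor of 17/8 per block, most of the total is spent in the last few blocks of the attack.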

L2 Output Root Proposals Specification

Table of Contents

- Overview

- Proposing L2 Output Commitments

- L2 Output Commitment Construction

- L2 Output Oracle Smart Contract

- Security Considerations

Overview

After processing one or more blocks, the outputs need to be synchronized with the settlement layer (L1) for trustless execution of L2-to-L1 messaging, such as withdrawals. These output proposals act as the bridge's view into the L2 state. Actors called "Proposers" submit the output roots to the settlement layer (L1). These output roots can be contested with a fault proof, with a bond at stake if the proof is wrong. The op-proposer is one such implementation of a proposer.

Note: Fault proofs on Optimism are not fully specified at this time. Although fault proof construction and verification are implemented in Cannon, the fault proof game specification and the integration of an output-root challenger into the rollup-node are part of later specification milestones.

Proposing L2 Output Commitments

The proposer's role is to construct and submit output roots, which are commitments to the L2's state, to the L2OutputOracle contract on L1 (the settlement layer). To do this, the proposer periodically queries the rollup node for the latest output root derived from the latest finalized L1 block. It then takes the output root and submits it to the L2OutputOracle contract on the settlement layer (L1).

L2OutputOracle v1.0.0

The submission of output proposals is permissioned to a single account. It is expected that this account will continue to submit output proposals over time to ensure that user withdrawals do not halt.

The L2 output proposer is expected to submit output roots on a deterministic interval based on the configured SUBMISSION_INTERVAL in the L2OutputOracle. The larger the SUBMISSION_INTERVAL, the less often L1 transactions need to be sent to the L2OutputOracle contract, but L2 users will need to wait a bit longer for an output root that includes their intention to withdraw from the system to be included in L1 (the settlement layer).

The honest op-proposer algorithm assumes a connection to the L2OutputOracle contract to know the L2 block number that corresponds to the next output proposal that must be submitted. It also assumes a connection to an op-node to be able to query the optimism_syncStatus RPC endpoint.

import time

while True:
    next_checkpoint_block = L2OutputOracle.nextBlockNumber()
    rollup_status = op_node_client.sync_status()
    if rollup_status.finalized_l2.number >= next_checkpoint_block:
        output = op_node_client.output_at_block(next_checkpoint_block)
        tx = send_transaction(output)
    time.sleep(poll_interval)

A CHALLENGER account can delete multiple output roots by calling the deleteL2Outputs() function and specifying the index of the first output to delete. This also deletes all subsequent outputs.
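The deletion semantics amount to truncating the list of stored outputs at the given index. The sketch below models only that truncation with a plain Python list; the function name is hypothetical, and any access control or additional checks performed by the contract are outside its scope.

```python
def delete_l2_outputs(outputs: list, first_index: int) -> list:
    """Drop the output at first_index and every output after it."""
    if not 0 <= first_index < len(outputs):
        raise IndexError("first_index must refer to an existing output")
    return outputs[:first_index]

# Four stored outputs; deleting from index 1 leaves only the first one.
remaining = delete_l2_outputs(["root0", "root1", "root2", "root3"], 1)
print(remaining)  # ['root0']
```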

L2 Output Commitment Construction

The output_root is a 32-byte string, which is derived using a versioned scheme:

output_root = keccak256(version_byte || payload)

where:

- version_byte (bytes32) is a simple version string which increments anytime the construction of the output root is changed.

- payload (bytes) is a byte string of arbitrary length.

In the initial version of the output commitment construction, the version is bytes32(0), and the payload is defined as:

payload = state_root || withdrawal_storage_root || latest_block_hash

where:

- The latest_block_hash (bytes32) is the block hash for the latest L2 block.

- The state_root (bytes32) is the Merkle-Patricia-Trie (MPT) root of all execution-layer accounts. This value is frequently used and thus elevated closer to the L2 output root, which removes the need to prove its inclusion in the pre-image of the latest_block_hash. This reduces the Merkle proof depth and cost of accessing the L2 state root on L1.

- The withdrawal_storage_root (bytes32) elevates the Merkle-Patricia-Trie (MPT) root of the Message Passer contract storage. Instead of making an MPT proof for a withdrawal against the state root (proving first the storage root of the L2toL1MessagePasser against the state root, then the withdrawal against that storage root), we can prove against the L2toL1MessagePasser's storage root directly, thus reducing the verification cost of withdrawals on L1.

  - After the Isthmus hard fork, the withdrawal_storage_root is present in the block header as withdrawalsRoot and can be used directly, instead of computing the storage root of the L2toL1MessagePasser contract.

  - Similarly, if the Isthmus hard fork is active at the genesis block, the withdrawal_storage_root is present in the block header as withdrawalsRoot.
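The version 0 construction can be sketched as plain byte concatenation followed by a hash. Keccak-256 is not part of Python's standard library (hashlib.sha3_256 uses different padding and yields different digests), so this sketch takes the hash function as a parameter; in practice a Keccak-256 implementation from a third-party library would be passed in. The function names and the placeholder field values are assumptions made for this example.

```python
from typing import Callable

VERSION_0 = bytes(32)  # bytes32(0), the initial output root version

def output_root_preimage(state_root: bytes, withdrawal_storage_root: bytes,
                         latest_block_hash: bytes, version: bytes = VERSION_0) -> bytes:
    """Return version || state_root || withdrawal_storage_root || latest_block_hash."""
    fields = (version, state_root, withdrawal_storage_root, latest_block_hash)
    if any(len(f) != 32 for f in fields):
        raise ValueError("every field must be exactly 32 bytes")
    payload = state_root + withdrawal_storage_root + latest_block_hash
    return version + payload

def output_root(keccak256: Callable[[bytes], bytes], state_root: bytes,
                withdrawal_storage_root: bytes, latest_block_hash: bytes) -> bytes:
    """Hash the version 0 pre-image with the caller-supplied Keccak-256 function."""
    return keccak256(output_root_preimage(state_root, withdrawal_storage_root,
                                          latest_block_hash))

# Placeholder 32-byte values, for illustration only.
preimage = output_root_preimage(b"\x11" * 32, b"\x22" * 32, b"\x33" * 32)
print(len(preimage))  # 128
```

The pre-image is always 128 bytes in this version: four 32-byte words, with the version word first.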

L2 Output Oracle Smart Contract

L2 blocks are produced at a constant rate of L2_BLOCK_TIME (2 seconds).

A new L2 output MUST be appended to the chain once per SUBMISSION_INTERVAL which is based on a number of blocks.

The exact number is yet to be determined, and will depend on the design of the fault proving game.
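Since SUBMISSION_INTERVAL is expressed in blocks and L2 blocks arrive every L2_BLOCK_TIME seconds, the wall-clock spacing of output proposals follows directly. The sketch below uses a hypothetical SUBMISSION_INTERVAL of 1800 blocks purely for illustration, and assumes checkpoints fall at whole multiples of the interval after the starting block number.

```python
L2_BLOCK_TIME = 2            # seconds per L2 block, as stated above
SUBMISSION_INTERVAL = 1800   # hypothetical value, in L2 blocks

def seconds_between_outputs(interval: int = SUBMISSION_INTERVAL,
                            block_time: int = L2_BLOCK_TIME) -> int:
    """Wall-clock time between two consecutive output proposals."""
    return interval * block_time

def checkpoint_blocks(starting_block_number: int, count: int,
                      interval: int = SUBMISSION_INTERVAL) -> list:
    """First `count` L2 block numbers at which an output is expected."""
    return [starting_block_number + i * interval for i in range(1, count + 1)]

print(seconds_between_outputs())   # 3600
print(checkpoint_blocks(0, 3))     # [1800, 3600, 5400]
```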

The L2 Output Oracle contract implements the following interface:

/**
 * @notice The number of the first L2 block recorded in this contract.
 */
uint256 public startingBlockNumber;

/**
 * @notice The timestamp of the first L2 block recorded in this contract.
 */
uint256 public startingTimestamp;

/**
 * @notice Accepts an L2 outputRoot and the timestamp of the corresponding L2 block. The
 * timestamp must be equal to the current value returned by `nextTimestamp()` in order to be
 * accepted.
 * This function may only be called by the Proposer.
 *
 * @param _l2Output The L2 output of the checkpoint block.
 * @param _l2BlockNumber The L2 block number that resulted in _l2Output.
 * @param _l1Blockhash A block hash which must be included in the current chain.